
The number of enterprises adopting Automation for their daily operations is growing rapidly. Automation can revolutionize IT service delivery and support, provided it is executed with the right planning and focus. Proper planning and setting the right level of expectations will result in at least one of the primary benefits: Cost Reduction, Revenue Generation, Risk Mitigation, or Quality Improvement. So, what is the checklist for starting an Automation journey?

- What is your end goal?

- Which of the 4 primary benefits (Cost Reduction, Revenue Generation, Risk Mitigation and Quality Improvement) are you targeting?

- Do you target one or more groups?

- Do you have COE groups focusing on Automation for the entire company?

- Are the tasks to be automated in IT jobs, Service Requests, DevOps, etc.?

- Do you have some sort of automation already in place?

- Have you already invested in tools and technologies to support Automation?

Once you have these clearly defined, you can plan your automation journey. As with any other transformation initiative, Automation should start with an analysis of the existing landscape and conditions.

- Current Workload Analysis

Your current workload could be measured in number of jobs, tickets, requests, calls, etc. Collect as much data as possible and group it into categories. Most of the data will be non-standard, but even Excel-based filtering and sorting will give a good idea of where your team is spending the most effort and money. Use the 80:20 rule to pick your candidates for immediate automation and design your Service Catalog around them.

- Right Process Identification

Most analyses will reveal that incorrect or inappropriate processes are being followed for most of the existing workflows. Brainstorm with the teams concerned and define and standardize the process flows, including the stakeholders involved, approvals required, and exceptions to be handled. The Service Catalog and the process flows will result in a more self-service-centric IT delivery system.

- Plan Execution

Start by segregating the automation candidates into:

- Start Small – A few cases may show immediate results once automated. Start with one small area like Account Creation or Password Resets.

- Self-Serve Automation – The next focus can be the cases that need a shift from generic incidents to self-service requests. Once the shift is done, these can be automated in the 2nd phase of your automation journey.

- AI Based – These are the cases where patterns need to be analyzed from the collected data and handled intelligently by the automation.
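
The candidates being segregated here come out of the 80:20 selection in the workload analysis step. As a rough illustration of that selection, the sketch below counts tickets per category and keeps the top categories until they cover about 80% of the volume. The function name `pareto_candidates`, the `threshold` parameter, and the sample category names and counts are all assumptions made up for this example, not data from a real environment.

```python
from collections import Counter


def pareto_candidates(categories, threshold=0.8):
    """Return (category, count) pairs for the top categories whose
    combined volume first reaches `threshold` of the total workload.

    `categories` is an iterable with one category label per ticket,
    job, or request. Names and threshold are illustrative assumptions.
    """
    counts = Counter(categories)
    total = sum(counts.values())
    if total == 0:
        return []

    selected = []
    cumulative = 0
    # Highest volume first; ties are broken by name so the order is stable.
    for category, count in sorted(counts.items(), key=lambda kv: (-kv[1], kv[0])):
        selected.append((category, count))
        cumulative += count
        if cumulative >= threshold * total:
            break
    return selected


# Hypothetical ticket export: one label per ticket (made-up numbers).
sample = (
    ["password_reset"] * 45
    + ["account_creation"] * 25
    + ["disk_cleanup"] * 12
    + ["vpn_access"] * 8
    + ["printer_issue"] * 6
    + ["other"] * 4
)

for category, count in pareto_candidates(sample):
    print(f"{category}: {count} tickets")
```

With this made-up sample, three of the six categories account for the bulk of the tickets, so they would be the first candidates to consider for the Service Catalog. The same grouping and sorting can be done in a spreadsheet; the code only makes the cut-off explicit.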

- Training & Communication

Automation doesn’t yield the intended results unless it is used, and used in the right way. All the parties involved, from end users to IT, should be told about the plan upfront, and adequate training should be part of the overall execution plan. The actual benefits of automation should be demonstrated to each group in terms of time or effort savings.

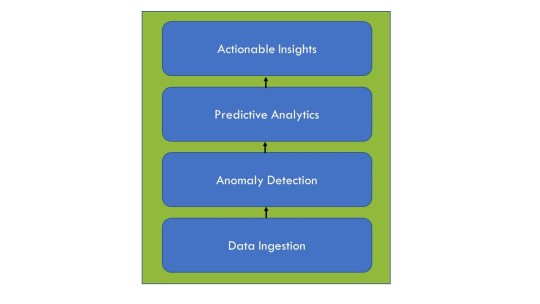

- Feedback and Improvements

Automation is not a one-time project. It has to run in feedback cycles to uncover more exceptions and add them to the backlog. The automation results should be audited systematically and regularly to validate them against the expected outputs. Organizations periodically adopt new technologies, tools and applications, which can become part of the automation scope in the next phase.

Relevance Lab’s AI-driven Automation Platform comes with a pre-built library of Automation BOTs for mundane IT tasks in areas like Identity & Access Management, Infrastructure Provisioning, DevOps, and Monitoring & Remediation.

Please get in touch with marketing@relevancelab.com for more details on starting your Automation journey.

About Author

Ashna Abbas is Director of Product Management at Relevance Lab. She is a software professional with 12+ years of experience in product development and delivery.
