
AWS Marketplace is a high-potential channel for delivering software and professional services. The main benefit for customers is that they get a single bill from AWS for all their infrastructure and software consumption. Also, since AWS is already on the approved vendor list for many enterprises, it is easier for those enterprises to buy software from the same vendor.

Relevance Lab has always considered AWS Marketplace one of the important channels for distributing its software products. In 2020, we listed our RLCatalyst 4.3.2 BOTs Server product on the AWS Marketplace as an AMI-based product that a customer could download and run in their AWS account. This year, RLCatalyst Research Gateway was listed on the AWS Marketplace as a Software as a Service (SaaS) product.

This blog details some of the steps that a customer needs to go through to consume this product from the AWS Marketplace.

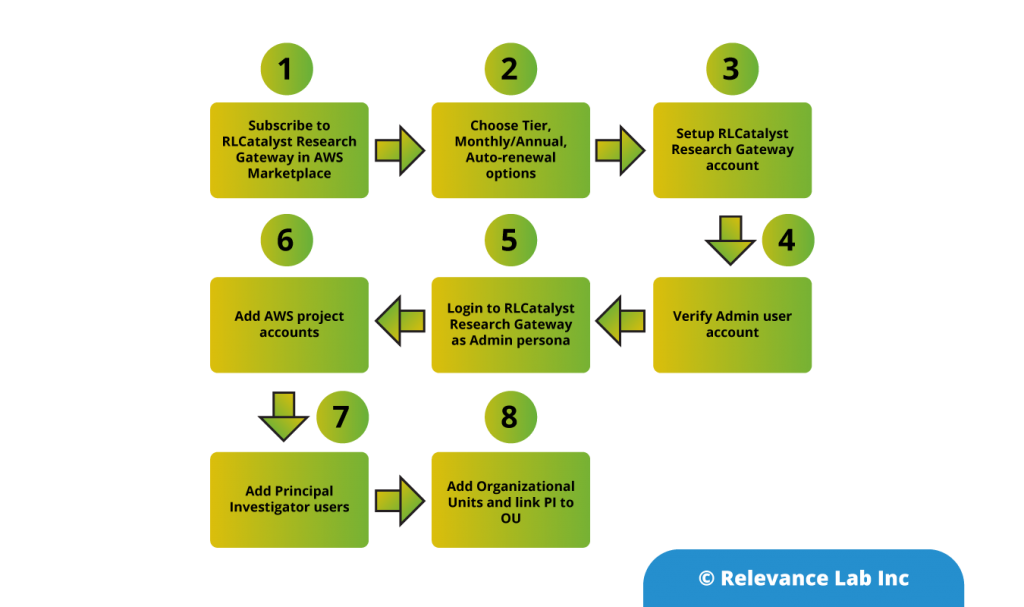

Step-1: The first step for a customer looking to find the product is to log in to their AWS account and visit the AWS Marketplace, then search for RLCatalyst Research Gateway. The Research Gateway product should appear at the top of the results. Clicking on the link leads to the product details page.

The product details page lists important details such as:

- Pricing information

- Support information

- Set up instructions

Step-2: The second step for the user is to subscribe to the product by clicking on the “Continue to Subscribe” button. This step requires the user to log in to their AWS account (if not done earlier). The page that comes up shows the contract options that the user can choose. RLCatalyst Research Gateway (SaaS) offers three subscription tiers:

- Small tier (1-10 users)

- Medium tier (11-25 users)

- Large tier (unlimited users)
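
As a rough illustration of how the tier boundaries above map to a team size, here is a minimal Python sketch. The function name `pick_tier` is hypothetical and is not part of the product or of any AWS API; it only encodes the user ranges listed above.

```python
def pick_tier(user_count: int) -> str:
    """Return the smallest listed tier that covers `user_count` users.

    Hypothetical helper for illustration only; the ranges come from
    the tier list in this post (1-10, 11-25, unlimited).
    """
    if user_count < 1:
        raise ValueError("user_count must be at least 1")
    if user_count <= 10:
        return "Small"
    if user_count <= 25:
        return "Medium"
    return "Large"
```

For example, a team of 12 researchers falls in the Medium tier, while any team larger than 25 needs the Large tier.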

The customer can also choose between a monthly contract and an annual contract. The monthly contract suits customers who want to try the product, or who prefer a budget outflow that is spread over the year rather than paid as a lump sum. The annual contract suits customers who are already committed to using the product in the long term, and it comes with an additional discount over the monthly price.

The customer also has to choose whether they want the contract to renew automatically or not.

One of the great features of AWS Marketplace is that the customer can modify the contract at any time and upgrade to a higher plan (e.g. Small tier to Medium or Large tier). The customer can also modify the contract to opt for auto-renewal at any time.

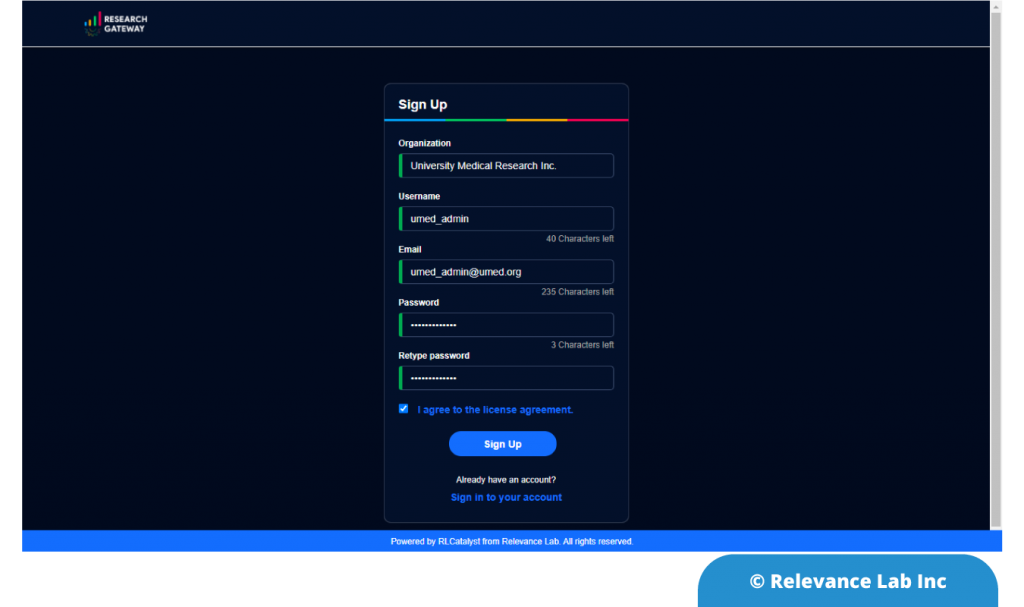

Step-3: The third step for the user is to click on the “Subscribe” button after choosing their contract options. This leads the user to the registration page where they can set up their RLCatalyst Research Gateway account.

This screen is meant for the Administrator persona to enter the details for the organization. Once the user enters the details, agrees to the End User License Agreement (EULA), and clicks on the Sign-up button, the process for provisioning the account is set in motion. The user should get an acknowledgment email within 12 hours and a verification email within 24 hours.

Step-4: The user should verify their email account by clicking on the verification link in the email they receive from RLCatalyst Research Gateway.

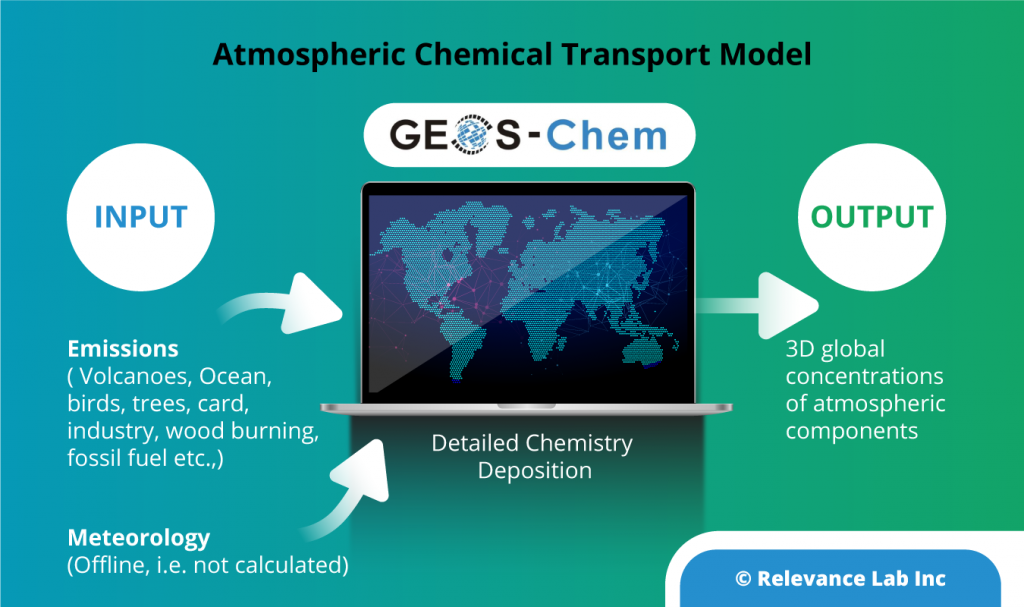

Step-5: Finally, the user will get a “Welcome” email with the details of their account, including the custom URL for logging in to their RLCatalyst Research Gateway account. The user is now ready to log in to the portal. On logging in, the user will see a Welcome screen.

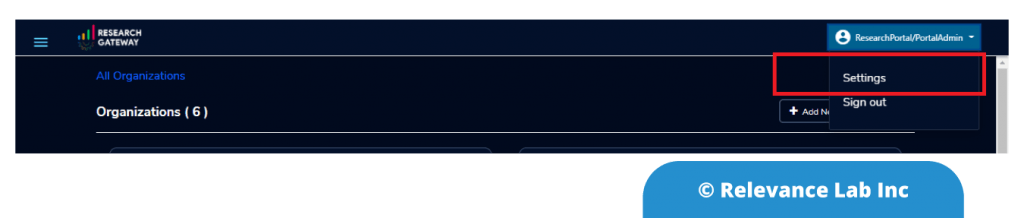

Step-6: The user can now set up their first Organizational Unit in the RLCatalyst Research Gateway portal by following these steps.

6.1 Navigate to settings from the menu at the top right.

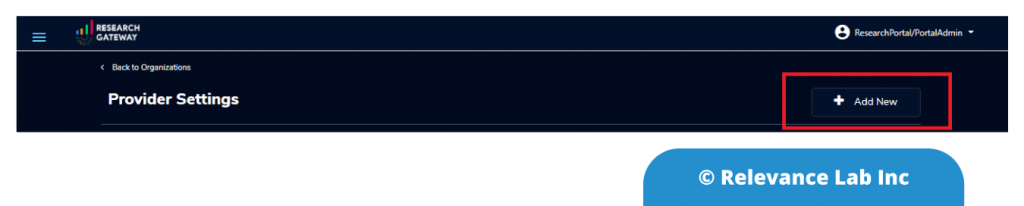

6.2 Click on the “Add New” button to add an AWS account.

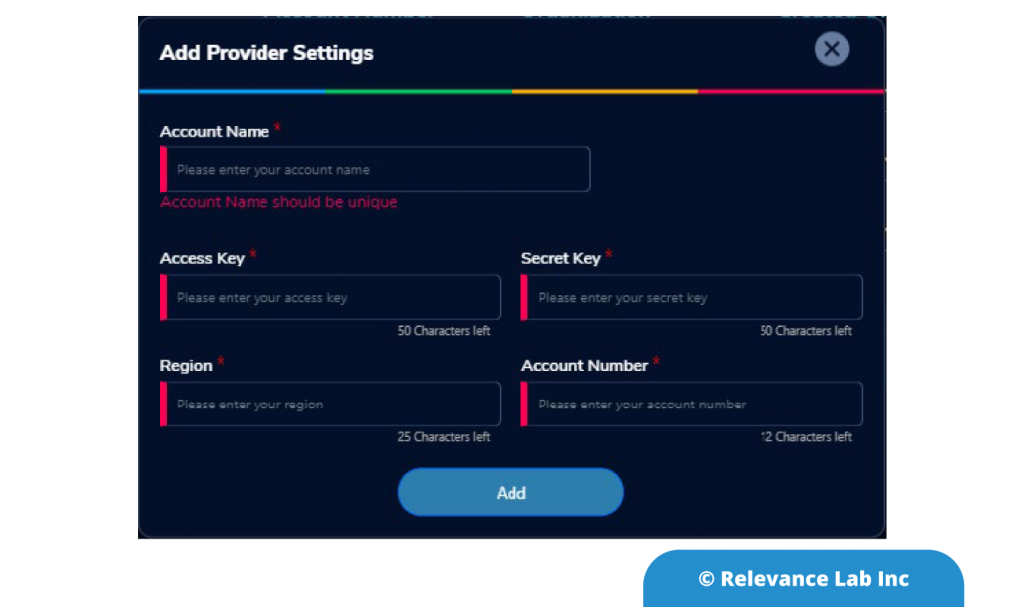

6.3 Enter the details of the AWS account.

Note that the account name given on this screen can be any name that helps the Administrator remember which OU and project this account is meant for.

6.4 The Administrator can repeat the procedure to add more than one project (consumption) account.

Step-7: Next, the Administrator needs to add Principal Investigator users to the account. For this, they should contact the support team either by email (rlc.support@relevancelab.com) or by visiting the support portal (https://serviceone.relevancelab.com).

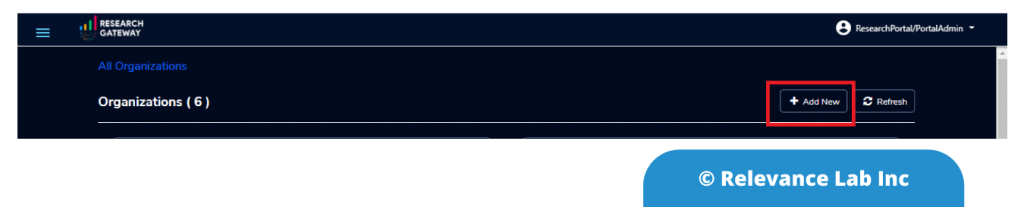

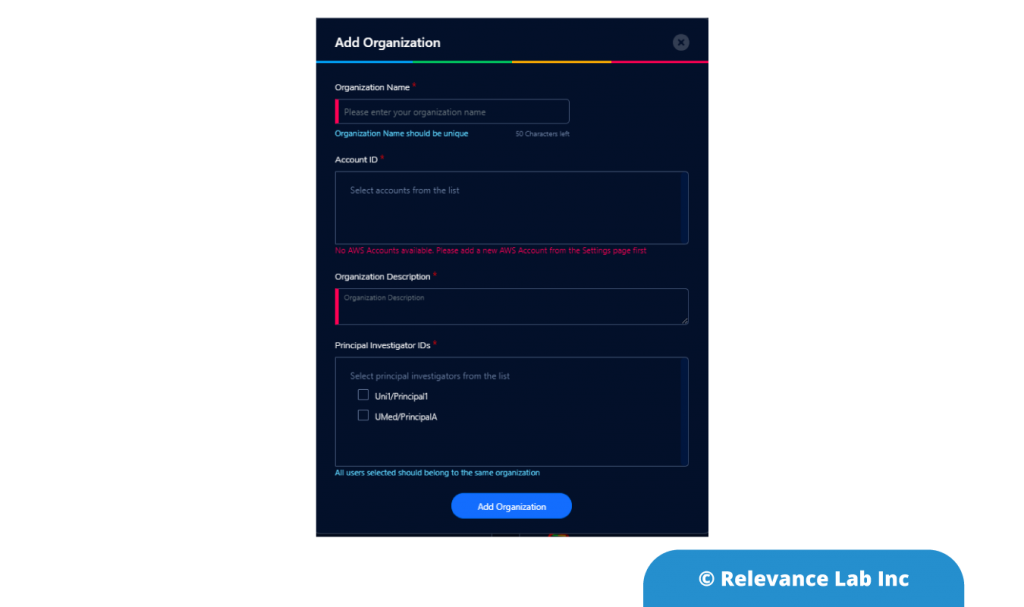

Step-8: The final step to set up an OU is to click on the “Add New” button on the Organizations page.

8.1 The Administrator should give a friendly name to the Organization in the “Organization Name” field, then choose all the Accounts that will be consumed by projects in this account. A friendly description should be entered in the “Organization Description” field. Finally, choose a Principal Investigator who will manage/own this Organizational Unit. Click “Add Organization” to add this OU.

Summary

As you can see above, ordering RLCatalyst Research Gateway (SaaS) from the AWS Marketplace makes it easy for the user to get started, and end-users can start using the product in no time. Given the SaaS model, the customer does not need to worry about setting up the software in their account. At the same time, using their own AWS account for the projects gives them complete transparency into budget consumption.

In our next blog, we will provide step-by-step details of adding organizational units, projects, and users to complete the next part of the setup.

To learn more about AWS Marketplace installation click here.

If you want to learn more about the product or book a live demo, feel free to contact marketing@relevancelab.com.