2023 Blog, AWS Platform, Blog, Featured

Relevance Lab (RL) is a specialist company that helps customers adopt cloud “The Right Way” by focusing on an “Automation-First” and DevOps strategy. This strategy covers the full lifecycle of migration, governance, security, monitoring, ITSM integration, app modernization, and DevOps maturity. Leveraging a combination of services and products for cloud adoption, we guide customers through a “Plan-Build-Run” transformation that drives faster product innovation, global deployment scale, and cost optimization for new-generation technology (SaaS) and enterprise companies.

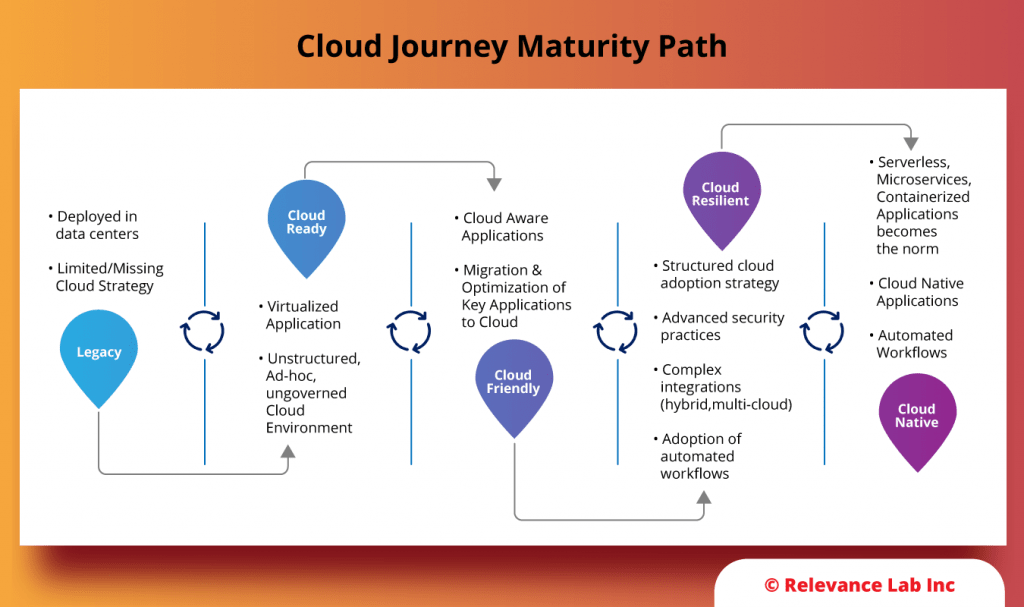

In this blog, we cover some common themes we use to help our customers adopt the cloud as part of their maturity journey:

- SaaS with multi-tenant architecture

- Multi-Account Cloud Management for AWS

- Microservices architecture with Docker and Kubernetes (AWS EKS)

- Jenkins for CI/CD pipelines, with a focus on cloud-agnostic tools

- AWS Control Tower for Cloud Management & Governance solution (policy, security & governance)

- DevOps maturity models

- Cost optimization, agility, and automation needs

- Standardization for M&A (Mergers & Acquisitions) integrations, and scaling across multiple cloud providers

- Spectrum of AWS governance for optimum utilization, robust security, and cost reduction

- Automation/BOT landscape: how different strategies suit different levels, and adoption of industry best practices for each

- Reference enterprise strategy for structuring DevOps in engineering environments with cloud-native development and SaaS-based products

Relevance Lab Cloud and DevOps Credentials at a Glance

- RL has been a cloud, DevOps, and automation specialist since its inception in 2011 (10+ years)

- Implemented 50+ successful customer cloud projects covering Plan-Build-Run lifecycle

- Globally has 250+ cloud specialists with 100+ certifications

- Cloud competencies cover infra, apps, data, and consulting

- Provide deep consulting and technology in cloud and DevOps

- RL products are available on the AWS and ServiceNow marketplaces, and RL is recognized globally as a specialist in “Infrastructure Automation”

- Deep architecture know-how on DevOps with microservices, containers, and Well-Architected principles

- Successfully managed multi-year engagements of $10M+ with large enterprise customers

- Actively managing 7,000+ cloud instances, 300+ applications, $5M+ annual cloud consumption, 20K+ annual tickets, 100+ automation BOTs, etc.

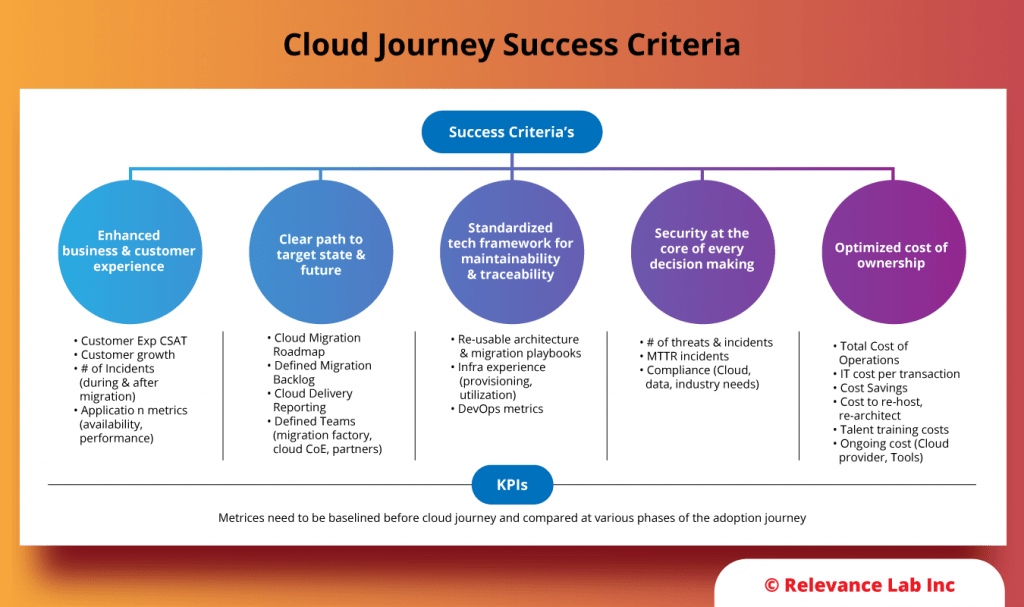

Need for a Comprehensive Approach to Cloud Adoption

Most enterprises today either have their applications in the cloud or are aggressively migrating new ones to digitally transform their business. This requires customers to think about the “Day-After” Cloud to avoid surprises in cost, security, and operational complexity. The right Cloud Management not only helps eliminate unwanted costs and compliance gaps, but also optimizes resource use, ensuring “The Right Way” to use the cloud. Our “Automation-First” approach minimizes manual intervention, thereby reducing error-prone manual work and costs.

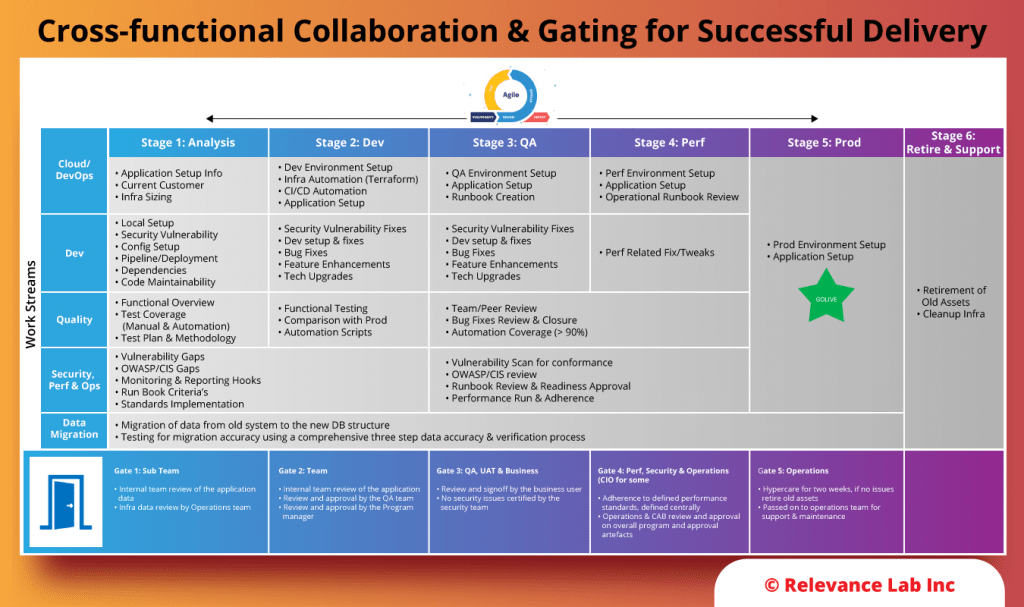

RL’s mature DevOps framework helps ensure that application development is done with accuracy, agility, and scale. Finally, to keep this whole framework of Cloud Management, Automation, and DevOps running seamlessly, you need the right AIOps-driven Service Delivery Model.

Hence, for any mature organization, the following four themes form the foundation: Cloud Management, Automation, DevOps, and AIOps.

Cloud Management

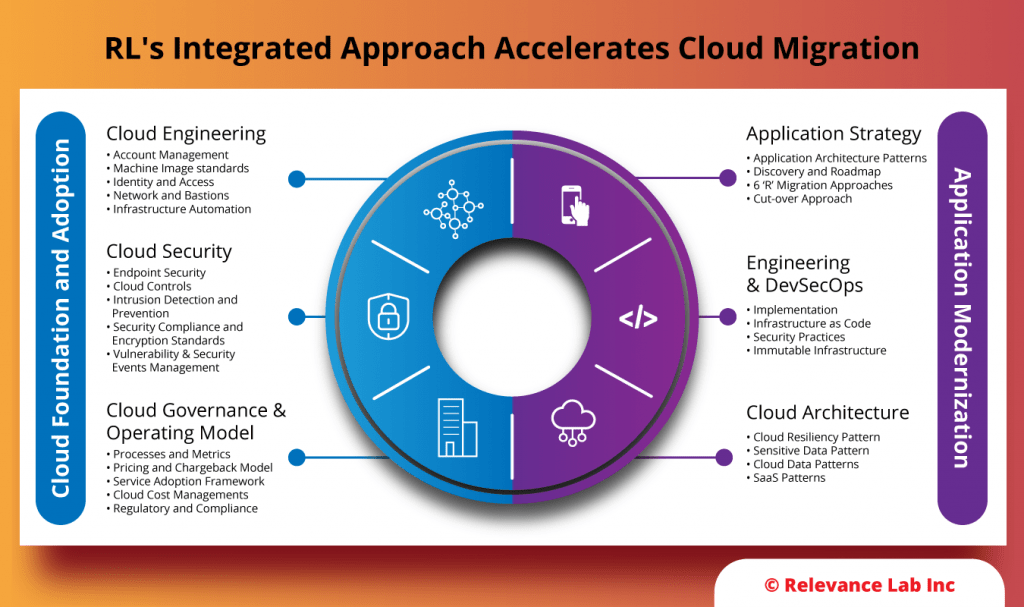

RL offers a unique methodology covering Plan-Build-Run lifecycle for Cloud Management, as explained in the diagram below.

The basic steps for Cloud Management are as follows:

Step-1: Leverage

Built on best practices offered by native cloud providers and popular solution frameworks, the RL methodology leverages the following for Cloud Management:

- AWS Well-Architected Framework

- AWS Management & Governance Lens

- AWS Control Tower for large scale multi-account management

- AWS Service Catalog for template-driven organization standard product deployments

- Terraform for Infra as Code automation

- AWS CloudFormation Templates

- AWS Security Hub
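The Python sketch below makes the template-driven idea concrete. It builds a CloudFormation template for a “standard” S3 bucket with versioning, default encryption, and mandatory tags. This is the kind of baseline an organization might publish as an AWS Service Catalog product.

The logical ID `AppLogsBucket`, the function `standard_bucket_template`, and the required tag keys are hypothetical choices for illustration. They are not part of RL's or AWS's tooling. The resource properties follow the public `AWS::S3::Bucket` schema.

```python
import json

# Hypothetical organization policy: every standard product must carry these tags.
REQUIRED_TAG_KEYS = ("CostCenter", "Owner", "Environment")


def standard_bucket_template(logical_id: str, tags: dict[str, str]) -> dict:
    """Return a CloudFormation template (as a dict) for an S3 bucket with
    versioning, default server-side encryption, and mandatory tags."""
    missing = [key for key in REQUIRED_TAG_KEYS if key not in tags]
    if missing:
        raise ValueError(f"missing required tags: {missing}")
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Standard S3 bucket (illustrative baseline)",
        "Resources": {
            logical_id: {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    "VersioningConfiguration": {"Status": "Enabled"},
                    "BucketEncryption": {
                        "ServerSideEncryptionConfiguration": [
                            {"ServerSideEncryptionByDefault": {"SSEAlgorithm": "AES256"}}
                        ]
                    },
                    # CloudFormation expects tags as a list of Key/Value objects.
                    "Tags": [{"Key": k, "Value": v} for k, v in sorted(tags.items())],
                },
            }
        },
    }


if __name__ == "__main__":
    tags = {"CostCenter": "1234", "Owner": "platform-team", "Environment": "dev"}
    # Emit JSON, e.g. for use as a Service Catalog product template.
    print(json.dumps(standard_bucket_template("AppLogsBucket", tags), indent=2))
```

Putting the tag check in the generator means a non-compliant product fails before it is ever deployed, rather than being flagged after the fact.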

Step-2: Augment

The basic Cloud Management best practices are augmented with unique products & frameworks built by RL based on our 50+ successful customer implementations covering the following:

- Quickstart automation templates

- AppInsights and ServiceOne – built on ITSM

- RLCatalyst cloud portals – built on Service Catalog

- Governance360 – built on Control Tower

- RLCatalyst BOTs Automation Server

Step-3: Instill

Instill ongoing maturity and optimization using the following themes:

- Four-level compliance maturity model

- Key organization metrics across assets, cost, health, governance, and compliance

- Industry-standard compliance frameworks such as HIPAA, SOC 2, GDPR, and NIST
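A metric such as compliance by category can be derived from individual check results. The minimal sketch below assumes a hypothetical `CheckResult` record (resource, category, pass/fail), for example one flattened from AWS Security Hub or AWS Config findings.

This is not RL's four-level maturity model. It only illustrates how one such organization metric could be computed.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class CheckResult:
    """Outcome of one compliance check on one resource (hypothetical schema)."""
    resource_id: str
    category: str  # e.g. "security", "cost", "governance"
    passed: bool


def compliance_by_category(results: list[CheckResult]) -> dict[str, float]:
    """Percentage of passed checks per category, rounded to one decimal place."""
    totals: dict[str, int] = defaultdict(int)
    passed: dict[str, int] = defaultdict(int)
    for result in results:
        totals[result.category] += 1
        if result.passed:
            passed[result.category] += 1
    return {cat: round(100.0 * passed[cat] / totals[cat], 1) for cat in totals}


if __name__ == "__main__":
    results = [
        CheckResult("i-001", "security", True),
        CheckResult("i-002", "security", False),
        CheckResult("bucket-a", "governance", True),
    ]
    print(compliance_by_category(results))  # {'security': 50.0, 'governance': 100.0}
```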

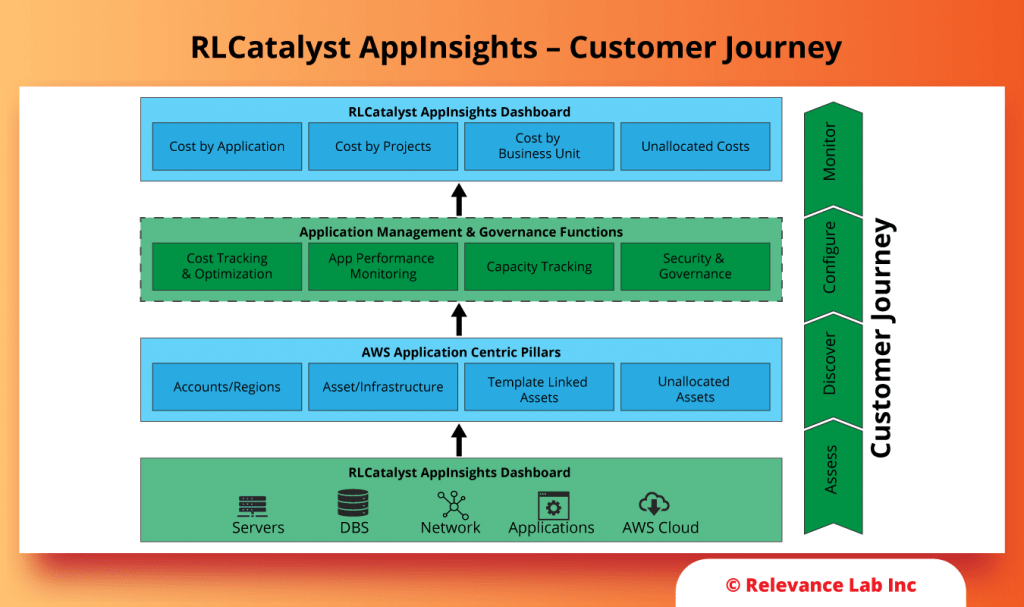

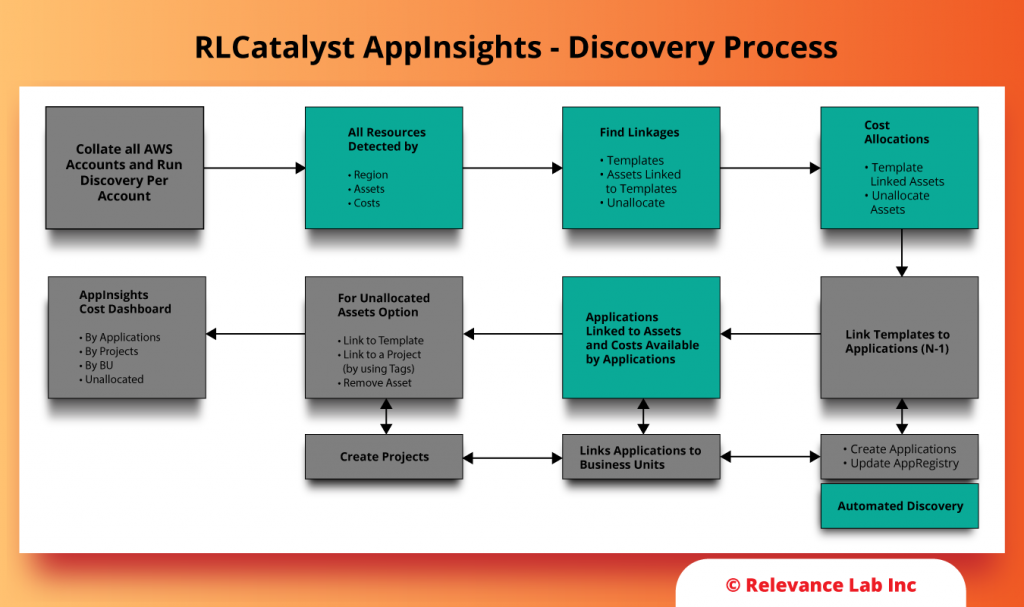

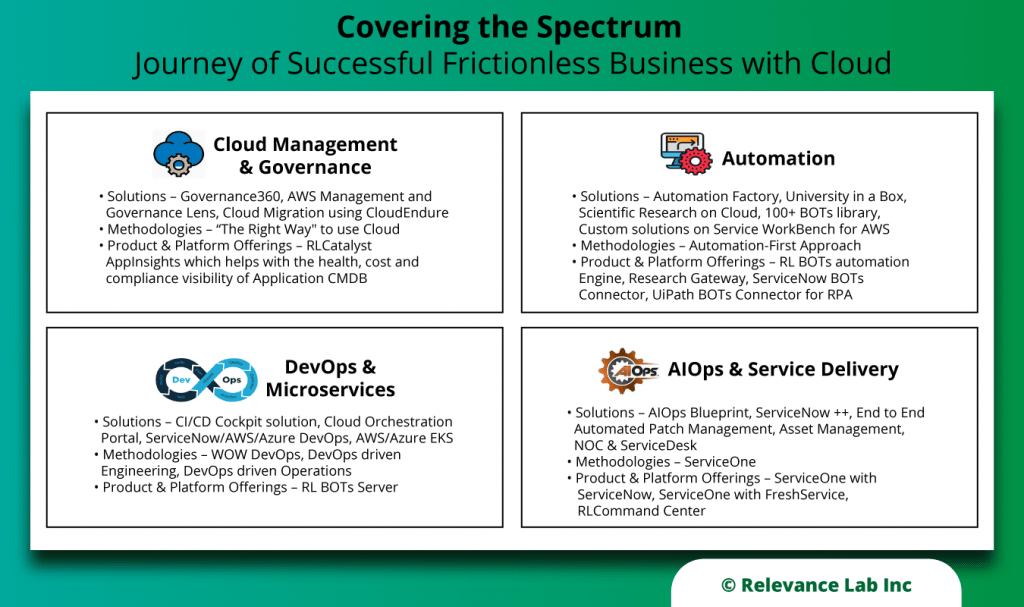

For Cloud Management and Governance, RL has Solutions such as Governance360, the AWS Management and Governance Lens, and Cloud Migration using CloudEndure. We also have methodologies such as “The Right Way” to use the cloud, and Product & Platform offerings such as RLCatalyst AppInsights.

Automation

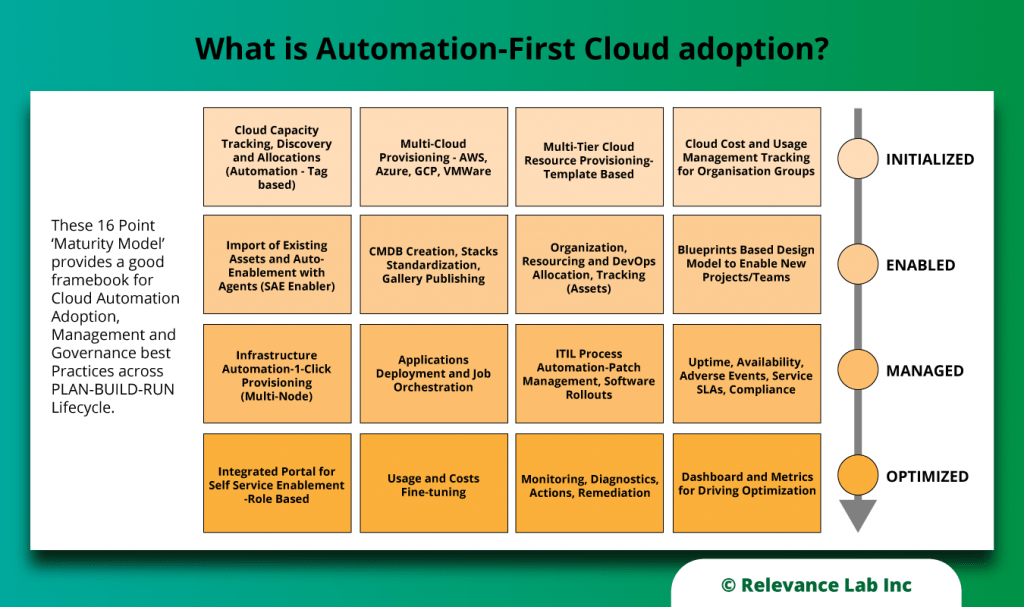

RL promotes an “Automation-First” approach for cloud adoption, covering all stages of the Plan-Build-Run lifecycle. We offer a mature automation framework called RLCatalyst BOTs and self-service cloud portals that allow full lifecycle automation.

To decide how to get started with automation, we use an initial assessment model called “What Can Be Automated” (WCBA). It analyzes the existing setup of cloud assets, the applications portfolio, IT service management tickets from the previous 12 months, and Governance/Security/Compliance models.
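One part of such an assessment is finding repetitive ticket categories over the previous 12 months. The Python sketch below shows that step, assuming a hypothetical `Ticket` record with only an open date and a category.

Real ITSM exports carry many more fields. The WCBA model described above also looks at assets, applications, and governance models, not just ticket volume.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Ticket:
    """Minimal ITSM ticket record (hypothetical fields, not a real ITSM schema)."""
    opened_at: datetime
    category: str


def automation_candidates(tickets, now, min_count=10, window_days=365):
    """Rank ticket categories opened within the last `window_days` by volume.

    High-volume, repetitive categories are typical first candidates for BOTs.
    Returns (category, count, share_of_recent_tickets) tuples, most frequent
    first, keeping only categories with at least `min_count` tickets.
    """
    cutoff = now - timedelta(days=window_days)
    recent = [t for t in tickets if t.opened_at >= cutoff]
    counts = Counter(t.category for t in recent)
    total = len(recent)
    return [
        (category, count, round(count / total, 2))
        for category, count in counts.most_common()
        if count >= min_count
    ]


if __name__ == "__main__":
    now = datetime(2023, 6, 1)
    tickets = (
        [Ticket(datetime(2023, 5, 2), "password reset")] * 12
        + [Ticket(datetime(2023, 4, 10), "disk space")] * 3
        + [Ticket(datetime(2021, 1, 5), "password reset")] * 50  # outside the window
    )
    print(automation_candidates(tickets, now))  # [('password reset', 12, 0.8)]
```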

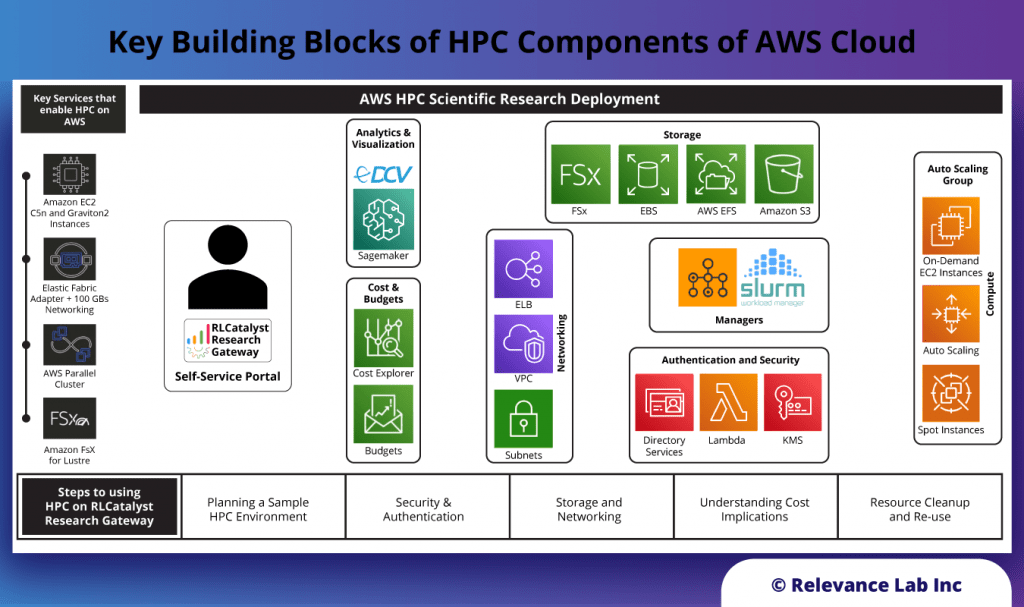

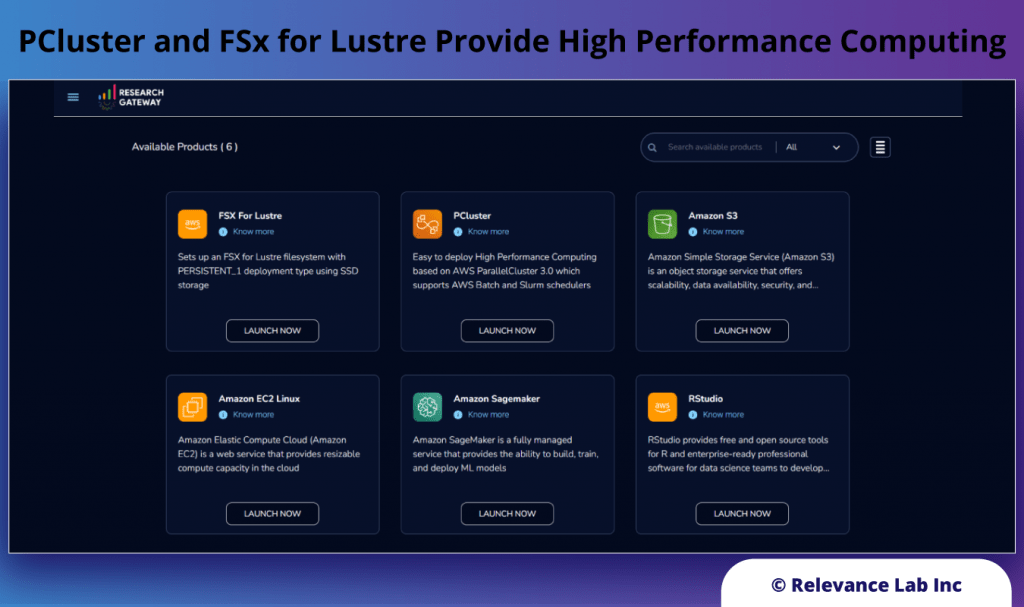

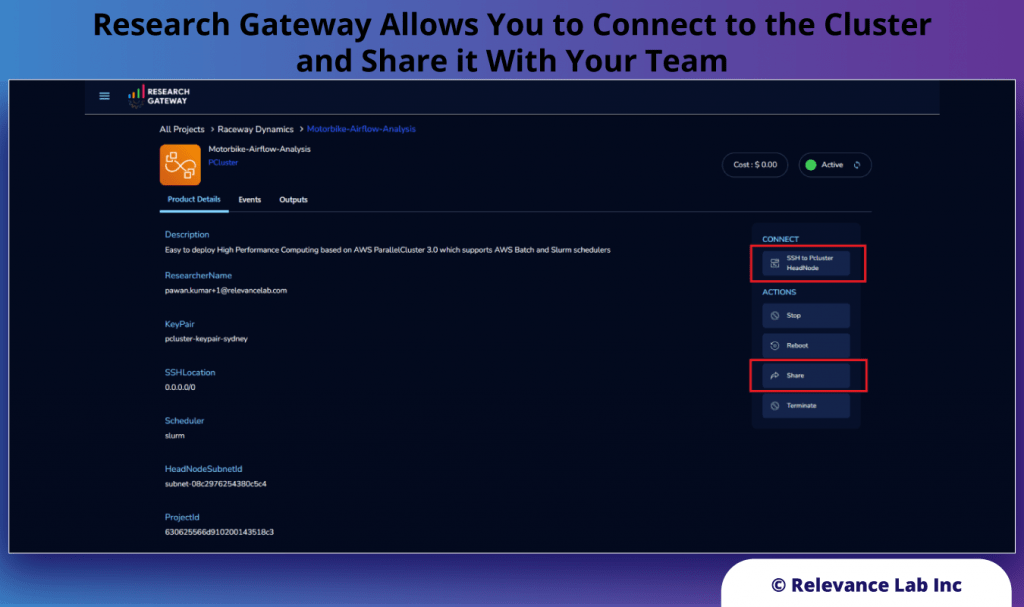

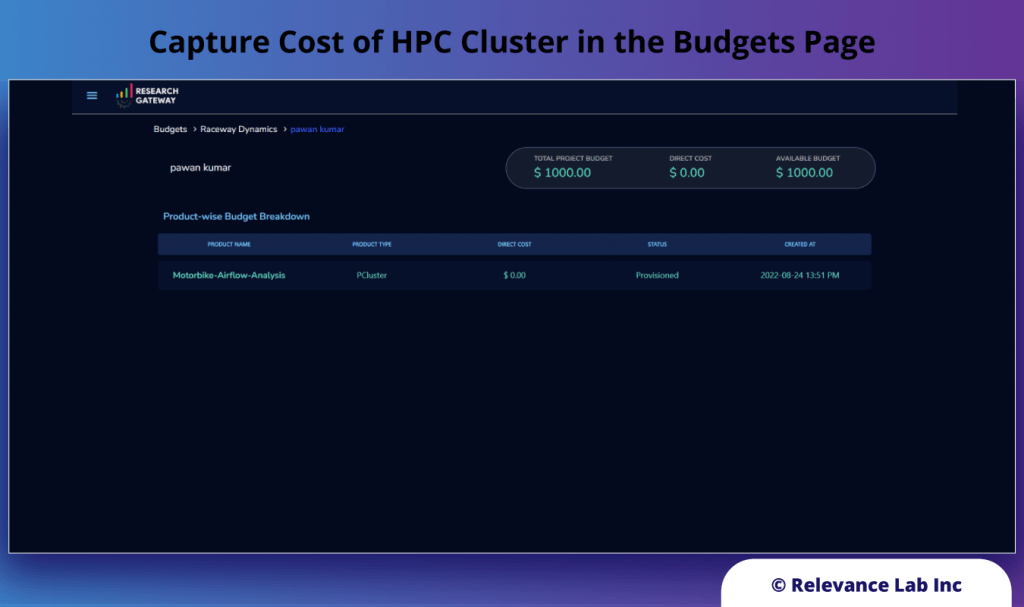

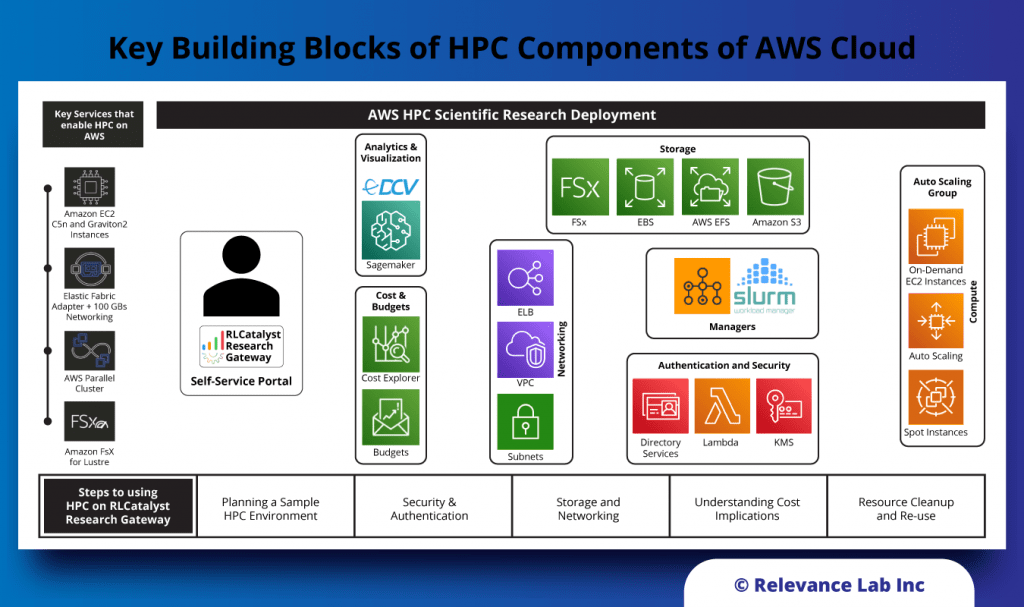

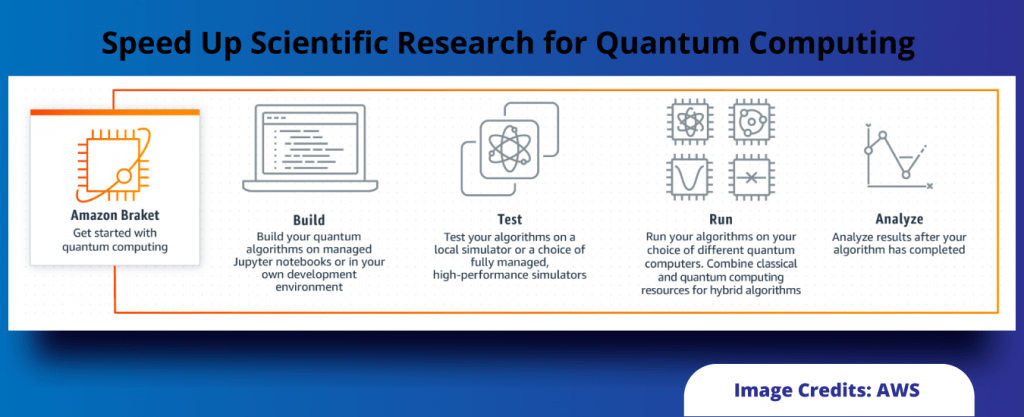

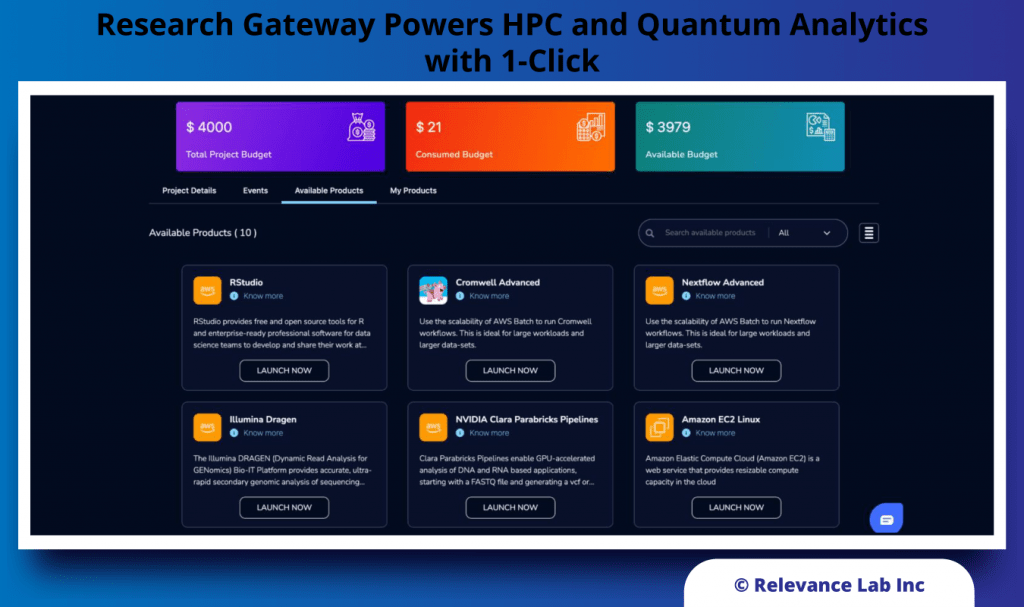

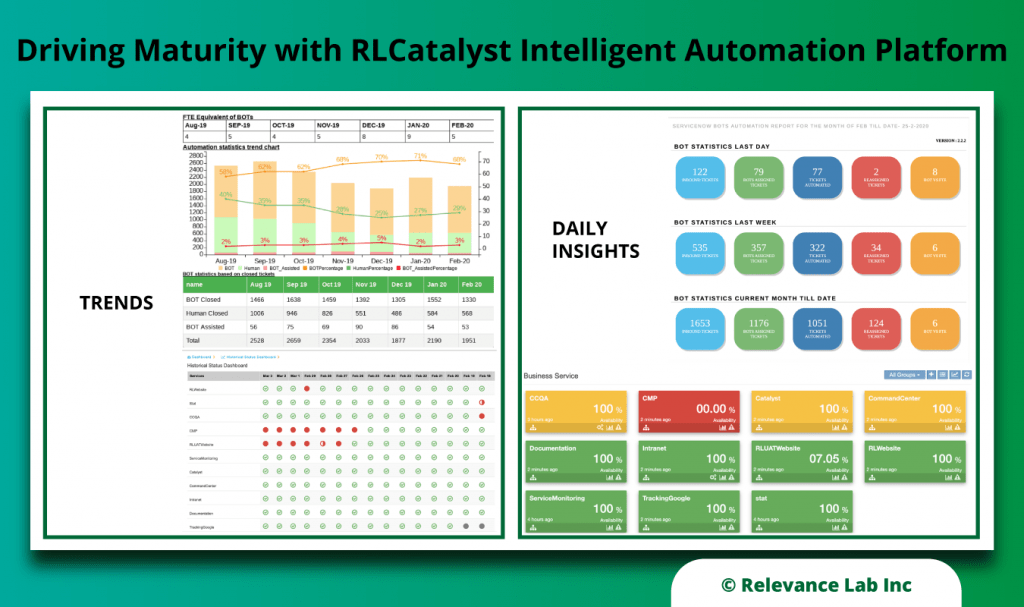

For the Automation theme, RL has Solutions such as Automation Factory, University in a Box, Scientific Research on Cloud, a library of 100+ BOTs, and custom solutions on Service Workbench on AWS. We also have methodologies such as the Automation-First Approach, and Product & Platform offerings such as the RL BOTs Automation Engine, Research Gateway, ServiceNow BOTs Connector, and UiPath BOTs Connector for RPA.

The following blogs explain our automation offerings in more detail:

- Building an Automation Factory

- Intelligent Automation with Pre-built Library of re-usable BOTs

- Automated Patch Management

- Intelligent Automation with RPA

- Automation Led Cloud Migration

- Automate Complex IT/HR processes like User Onboarding & Offboarding

- Automation helps in the time of crisis like COVID-19

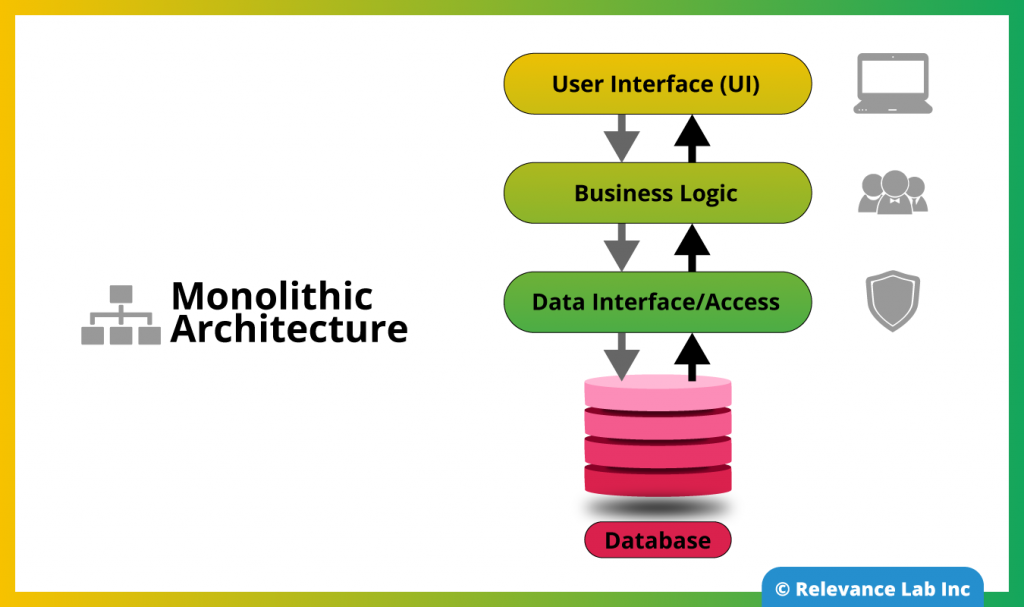

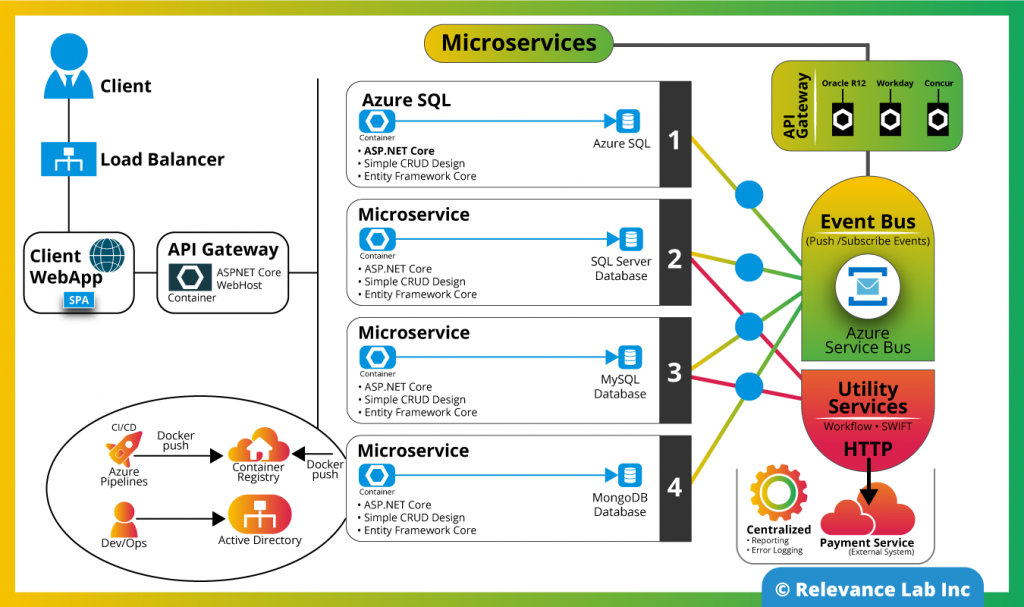

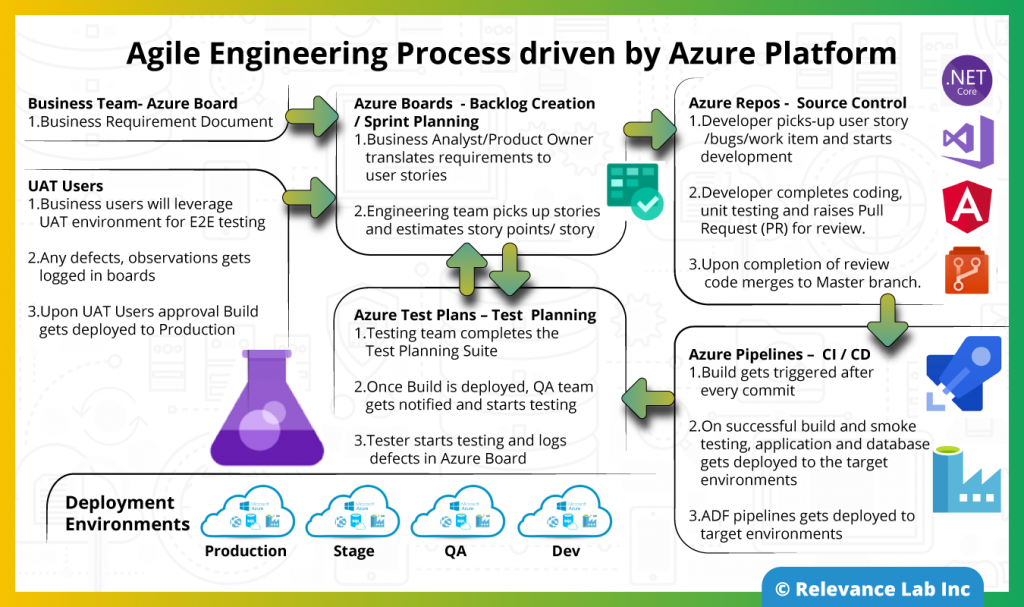

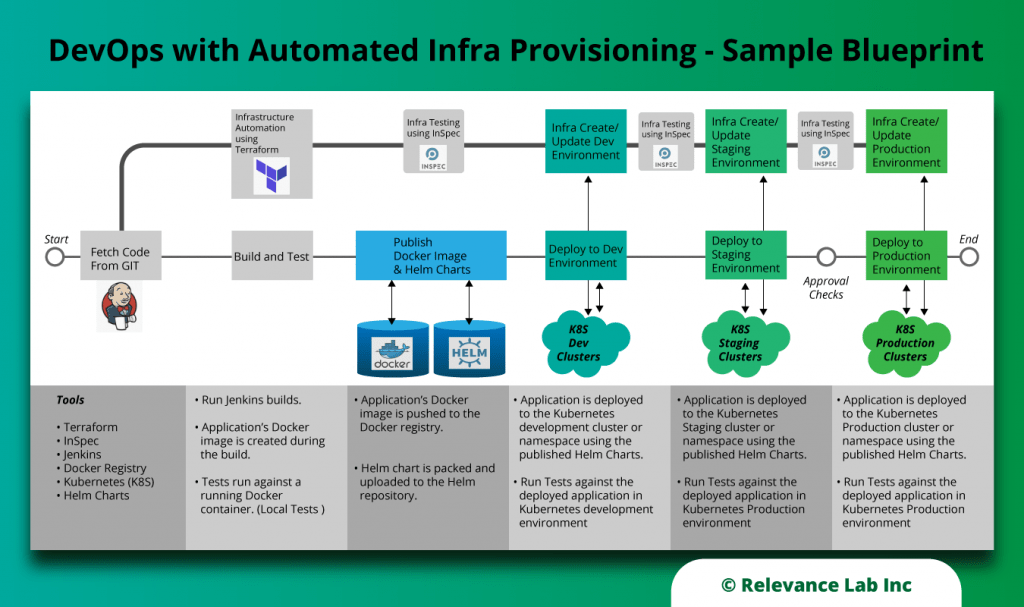

DevOps and Microservices

DevOps and microservices with containers are a key part of modern architectures for scalability, re-use, and cost-effectiveness. As a DevOps specialist, RL has been re-architecting applications and migrating them to the cloud across segments including education, pharma & life sciences, insurance, and ISVs. Container adoption is a key building block for faster product delivery through Continuous Integration and Continuous Delivery (CI/CD) models. Key considerations our teams follow for CI/CD with containers and Kubernetes include:

- Role-based deployments

- Explicit declarations

- Environment-dependent attributes for better configuration management

- Order of execution and well-defined structure

- Application blueprints

- Repeatable and re-usable resources and components

- Self-contained artifacts for easy portability
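A small Python sketch can illustrate the “environment-dependent attributes” and “explicit declarations” points. A base configuration declares every allowed key once, and each environment overrides only what differs. The keys, values, and environment names are hypothetical.

In Kubernetes projects this role is often played by Helm values files or Kustomize overlays rather than hand-written code.

```python
from copy import deepcopy

# Every allowed attribute is declared once, with its default value (hypothetical).
BASE = {"replicas": 1, "image_tag": "1.4.2", "log_level": "INFO"}

# Each environment overrides only the attributes that genuinely differ.
OVERRIDES = {
    "dev": {"log_level": "DEBUG"},
    "prod": {"replicas": 3, "log_level": "WARNING"},
}


def resolve_config(env: str) -> dict:
    """Merge an environment's overrides onto the base configuration.

    Unknown environments and overrides for undeclared keys raise KeyError,
    so typos fail the pipeline instead of silently adding new settings.
    """
    if env not in OVERRIDES:
        raise KeyError(f"unknown environment: {env}")
    config = deepcopy(BASE)  # never mutate the shared base
    for key, value in OVERRIDES[env].items():
        if key not in BASE:
            raise KeyError(f"override for undeclared key: {key}")
        config[key] = value
    return config


if __name__ == "__main__":
    print(resolve_config("prod"))  # {'replicas': 3, 'image_tag': '1.4.2', 'log_level': 'WARNING'}
```

Keeping `image_tag` identical across environments means the artifact promoted to production is the same one tested in dev. Only scale and logging vary by environment.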

The following diagram shows a standard blueprint we follow for DevOps:

For the DevOps & Microservices theme, RL has Solutions such as the CI/CD Cockpit, Cloud Orchestration Portal, ServiceNow/AWS/Azure DevOps, and AWS EKS/Azure AKS. We also have methodologies such as WOW DevOps, DevOps-driven Engineering, and DevOps-driven Operations, and Product & Platform offerings such as the RL BOTs Connector.

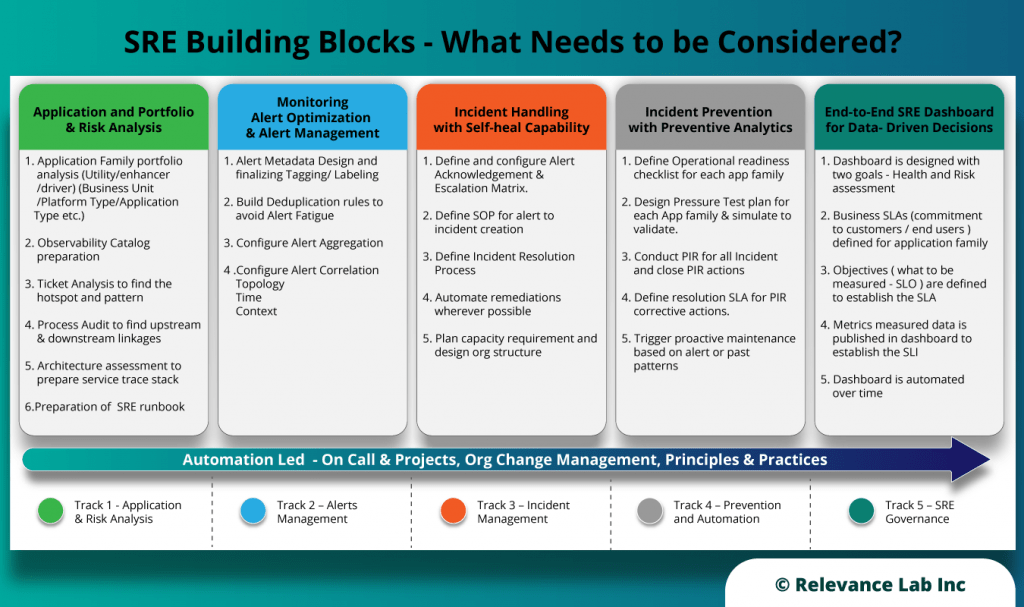

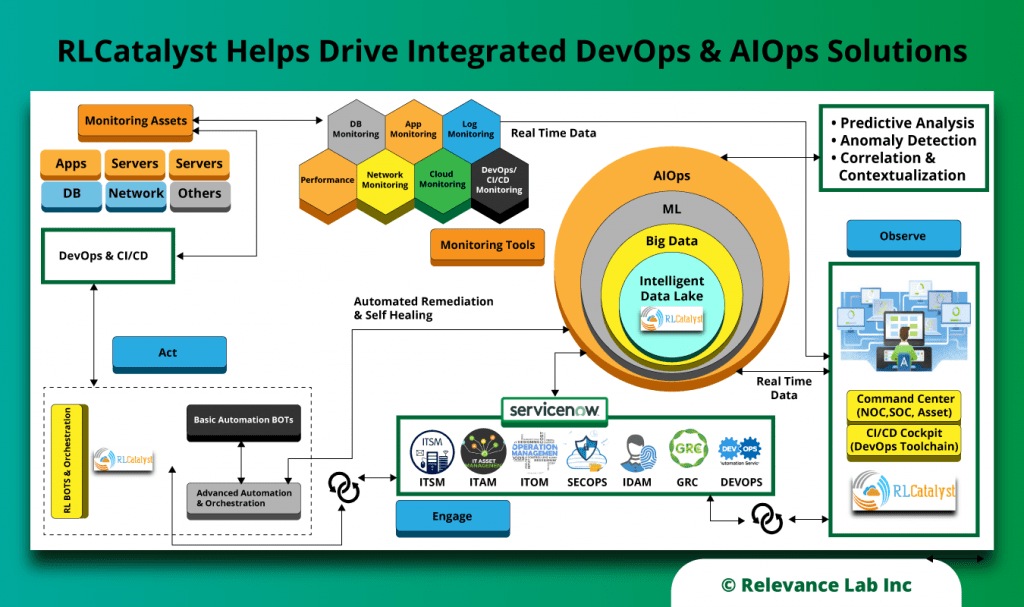

AIOps and Service Delivery

RL brings unique strengths in AIOps with IT Service Delivery Management on platforms such as ServiceNow, Jira ServiceDesk, and FreshService. Our platform-based approach combines intelligent monitoring, service delivery management, and automation. Together with the right technology, tools, and methodologies, this gives customers a mature, prescriptive architecture for achieving AIOps. Customers have deployed our AIOps solutions in 3 months and achieved:

- 70% automation of inbound requests
- An 80% reduction in noise from proactive monitoring
- 3x faster fulfillment of tickets and SLAs
- A shift to a proactive, DevOps-led organization structure
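Noise reduction in monitoring commonly relies on deduplicating and correlating alerts. The Python sketch below shows only the simplest technique: it suppresses repeats of the same (source, check) pair within a time window and computes the resulting noise reduction.

The `Alert` fields and the 300-second window are hypothetical. This is not a description of how RL's AIOps platform works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    """A raw monitoring alert (hypothetical fields)."""
    source: str     # host or service that raised the alert
    check: str      # name of the failing check, e.g. "cpu_high"
    timestamp: int  # seconds since epoch


def deduplicate(alerts: list[Alert], window_seconds: int = 300) -> list[Alert]:
    """Keep an alert only if the same (source, check) pair has not been
    forwarded within the last `window_seconds`.

    Suppressed repeats do not extend the window, so a persistent problem
    is re-raised once per window rather than never.
    """
    last_forwarded: dict[tuple[str, str], int] = {}
    kept = []
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        key = (alert.source, alert.check)
        previous = last_forwarded.get(key)
        if previous is None or alert.timestamp - previous >= window_seconds:
            kept.append(alert)
            last_forwarded[key] = alert.timestamp
    return kept


def noise_reduction_pct(raw_count: int, kept_count: int) -> float:
    """Share of raw alerts suppressed, as a percentage."""
    return 0.0 if raw_count == 0 else 100.0 * (raw_count - kept_count) / raw_count


if __name__ == "__main__":
    raw = [Alert("web-1", "cpu_high", t) for t in range(0, 360, 60)]  # 6 repeats
    raw.append(Alert("db-1", "disk_full", 10))
    kept = deduplicate(raw)
    print(len(raw), len(kept), round(noise_reduction_pct(len(raw), len(kept)), 1))  # 7 3 57.1
```

Production AIOps tools typically add topology-aware correlation and anomaly detection on top of this. Time-window deduplication alone is just a first layer.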

For the AIOps & Service Delivery theme, RL has Solutions such as AIOps Blueprint, ServiceNow++, End-to-End Automated Patch Management, and Asset Management NOC & ServiceDesk. We also have methodologies such as ServiceOne, and Product & Platform offerings such as ServiceOne with ServiceNow, ServiceOne with FreshService, and RLCommand Center.

Summary

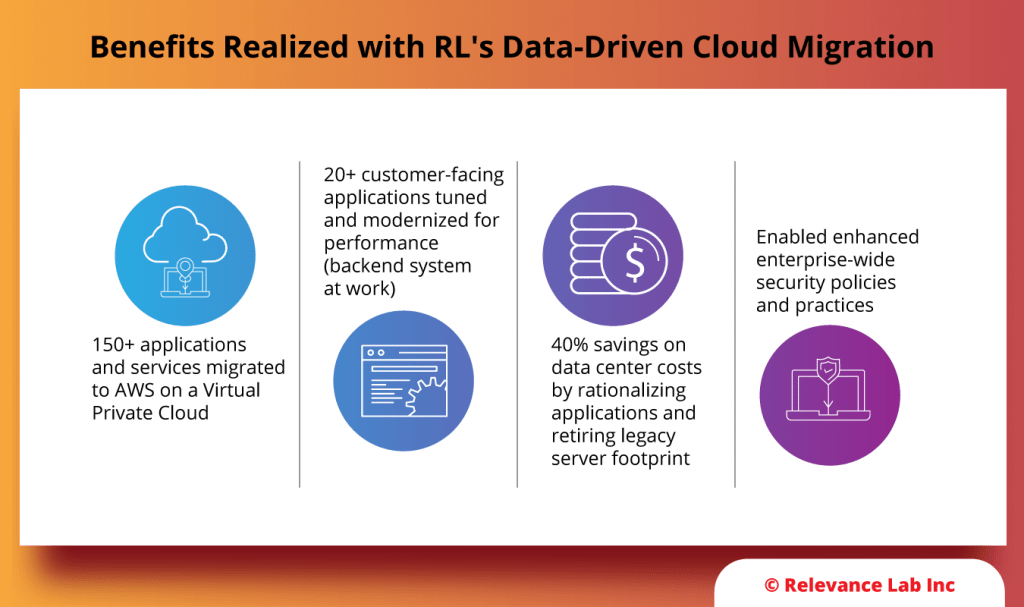

RL offers a combination of Solutions, Methodologies, and Product & Platform offerings covering the full 360-degree spectrum of enterprise Cloud & DevOps adoption across four tracks: Cloud Management, Automation, DevOps, and AIOps.

A technology-driven approach that leverages an “Automation-First” model has helped our customers reduce their IT spend by 30% over 3 years, with 3x faster product deliveries and real-time security & compliance.

To know more about our Cloud Centre of Excellence and how we can help you adopt Cloud “The Right Way” with best practices leveraging Cloud Management, Automation, DevOps, and AIOps, feel free to write to marketing@relevancelab.com
