Introduction

Research IT teams have in recent years adopted self-service portals to reduce dependence on manual IT support and remove friction. However, even the best portals still expect scientists to think like systems rather than people. Copilots flip this paradigm: instead of navigating menus and forms, researchers can simply describe what they need in plain English and let the system handle the complexity behind the scenes. The promise is powerful, but only if copilots are applied where they truly excel.

The real value of an AI copilot lies in its ability to translate natural-language intent into structured actions, search internal knowledge bases, and recommend next steps based on context. Traditional portals never offered these capabilities. However, not every problem is ready for conversational automation. Tasks that require precise cost aggregation, deep log correlation, or complex real-time diagnostics still depend on deterministic systems and curated pipelines.

This guide explores how copilots can radically simplify research computing and highlights which use cases are ready for automation today. It also offers a practical framework for combining conversational AI with proven infrastructure tools, so that scientists can focus on science, not servers.

Where AI Copilots Excel in Research IT

Natural Language Frontend for Self-Service

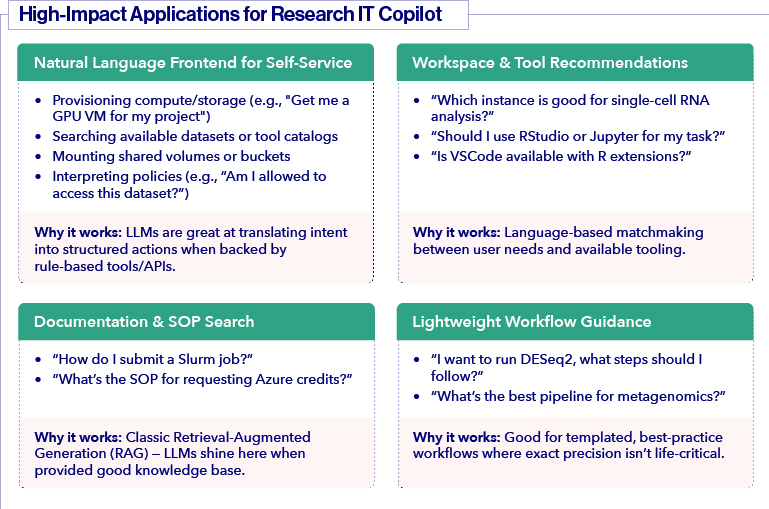

The most immediate value comes from eliminating the learning curve for common IT requests. Instead of requiring users to navigate complex portals or remember specific commands, copilots can interpret natural-language requests and translate them into structured actions.

Effective applications include:

- Provisioning compute and storage resources ("Get me a GPU VM for my project")

- Searching available datasets or tool catalogs

- Mounting shared volumes or cloud storage buckets

- Interpreting access policies ("Am I allowed to access this dataset?")

This works because LLMs are good at interpreting user intent. When that interpretation is mapped onto well-defined APIs and rule-based systems, the actions themselves remain deterministic. The copilot handles the interface complexity while backend systems execute the actual provisioning.
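A minimal sketch of that split follows. It assumes the copilot's LLM has been prompted to emit a JSON object with hypothetical `action` and `params` fields. Deterministic code, not the model, decides whether the request maps to an allowed action with valid parameters. The action names, parameter rules, and instance types are illustrative assumptions, not a real provisioning API.

```python
import json
from dataclasses import dataclass

# Hypothetical catalog of backend actions and their required parameters.
ALLOWED_ACTIONS = {
    "provision_vm": {"project", "instance_type"},
    "mount_volume": {"project", "volume"},
}

# Hypothetical instance types the platform supports.
ALLOWED_INSTANCE_TYPES = {"gpu-small", "gpu-large", "cpu-standard"}


@dataclass
class StructuredAction:
    action: str
    params: dict


def parse_intent(llm_output: str) -> StructuredAction:
    """Validate the model's JSON output before anything is executed."""
    try:
        data = json.loads(llm_output)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model did not return valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")

    action = data.get("action")
    params = data.get("params", {})
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported action: {action!r}")
    if not isinstance(params, dict):
        raise ValueError("params must be a JSON object")

    # Every required parameter must be present.
    missing = ALLOWED_ACTIONS[action] - set(params)
    if missing:
        raise ValueError(f"missing parameters: {sorted(missing)}")

    # Reject values the backend does not offer, rather than guessing.
    if action == "provision_vm" and params["instance_type"] not in ALLOWED_INSTANCE_TYPES:
        raise ValueError(f"unknown instance type: {params['instance_type']!r}")

    return StructuredAction(action=action, params=params)
```

In a real deployment, the validated `StructuredAction` would be handed to the provisioning backend. The model never calls infrastructure APIs directly.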

Workspace and Tool Recommendations

Research IT environments typically offer dozens of tools and instance types. Copilots can act as intelligent matchmakers, connecting needs that users describe in natural language with appropriate resources.

Common scenarios include:

- Instance selection ("Which instance is good for single-cell RNA analysis?")

- Tool recommendations ("Should I use RStudio or Jupyter for my task?")

- Software availability ("Is VSCode available with R extensions?")

The value here lies in democratizing expertise. New researchers can access the same tool selection guidance that would typically require consultation with experienced IT staff.
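One way to keep these recommendations grounded is to have the copilot consult a curated catalog rather than answer from the model's general knowledge. The sketch below scores hypothetical catalog entries by keyword overlap with the user's request. The entries, tags, and scoring rule are illustrative assumptions. A production system might use embeddings or rules maintained by IT staff instead.

```python
import re
from dataclasses import dataclass


@dataclass
class CatalogEntry:
    name: str
    description: str
    tags: frozenset


# Hypothetical entries; a real catalog would be curated by Research IT staff.
CATALOG = [
    CatalogEntry("mem-large", "High-memory instance for single-cell RNA analysis",
                 frozenset({"single-cell", "rna", "memory", "scanpy", "seurat"})),
    CatalogEntry("gpu-small", "Single-GPU instance for model training",
                 frozenset({"gpu", "training", "deep", "learning", "pytorch"})),
    CatalogEntry("cpu-standard", "General-purpose instance for scripting and notebooks",
                 frozenset({"general", "jupyter", "rstudio", "notebook"})),
]


def tokenize(text: str) -> set:
    # Lowercase word tokens; hyphenated terms like "single-cell" stay intact.
    return set(re.findall(r"[a-z0-9]+(?:-[a-z0-9]+)*", text.lower()))


def recommend(query: str, top_k: int = 1) -> list:
    """Rank catalog entries by how many of their tags appear in the query."""
    words = tokenize(query)
    scored = [(len(entry.tags & words), entry.name) for entry in CATALOG]
    ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: (-s[0], s[1]))
    return [name for _, name in ranked[:top_k]]
```

An empty result means nothing matched. The copilot should then ask a clarifying question or route the request to staff, rather than improvise a recommendation.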

Documentation and Knowledge Base Search

Traditional documentation searches often fail when users don't know the exact terminology. Copilots can understand context and intent, making institutional knowledge more accessible through conversational interfaces.

High-impact use cases:

- Procedural guidance ("How do I submit a Slurm job?")

- Policy interpretation ("What's the SOP for requesting Azure credits?")

- Troubleshooting common issues

This represents a classic Retrieval-Augmented Generation (RAG) application where LLMs truly shine. When provided with a well-structured knowledge base, they can surface relevant information based on conversational queries.
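The retrieval half of RAG can be illustrated without any external services. This sketch ranks hypothetical knowledge-base snippets by term overlap with the question. It then assembles a prompt that restricts the model to the retrieved text. Real deployments typically use embeddings and a vector index. The documents, stopword list, and prompt wording are assumptions for illustration.

```python
import re
from collections import Counter

# Hypothetical knowledge-base snippets, keyed by document title.
DOCS = {
    "Submitting Slurm jobs": "Write a batch script with #SBATCH directives and submit it with sbatch.",
    "Requesting Azure credits": "Open a ticket with the Research IT team and include the project code.",
    "Mounting shared storage": "Shared volumes are mounted under /shared on all workspace instances.",
}

# Small illustrative stopword list so common words do not drive matches.
STOPWORDS = {"a", "an", "the", "i", "do", "how", "to", "for", "with", "and",
             "it", "on", "is", "what", "s", "my"}


def terms(text: str) -> Counter:
    words = re.findall(r"[a-z0-9]+", text.lower())
    return Counter(w for w in words if w not in STOPWORDS)


def retrieve(question: str, k: int = 2) -> list:
    """Return titles of up to k documents sharing terms with the question."""
    q = terms(question)
    scores = {
        title: sum((q & terms(title + " " + body)).values())
        for title, body in DOCS.items()
    }
    ranked = sorted(scores, key=lambda t: (-scores[t], t))
    return [t for t in ranked[:k] if scores[t] > 0]


def build_prompt(question: str) -> str:
    """Ground the model's answer in the retrieved text only."""
    context = "\n\n".join(f"[{t}]\n{DOCS[t]}" for t in retrieve(question))
    return (
        "Answer using only the documents below. "
        "If they do not contain the answer, say so.\n\n"
        f"{context}\n\nQuestion: {question}"
    )
```

The instruction to admit when the documents lack an answer matters as much as the retrieval itself. It keeps the copilot from filling gaps with plausible but unsupported policy.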

Lightweight Workflow Guidance

For established research workflows, copilots can provide step-by-step guidance and best-practice recommendations. This is particularly valuable for onboarding new team members or standardizing approaches across projects.

Effective applications:

- Analysis pipeline recommendations ("I want to run DESeq2, what steps should I follow?")

- Methodology guidance ("What's the best pipeline for metagenomics?")

- Configuration templates and examples

The key limitation here is that workflows must be well-documented and relatively standardized. Custom or experimental approaches still require human expertise.

Areas Requiring Caution

AWS Cost Analysis and Optimization

Cost management involves complex data relationships, precise financial calculations, and multi-dimensional analysis. LLMs still struggle with accuracy and reliability in all of these areas.

Challenging aspects include:

- Parsing Cost and Usage Reports (CUR)

- Accurate project and user chargeback calculations

- Savings Plans and Reserved Instance optimization

- Multi-dimensional cost attribution across services

However, copilots can add value when working with pre-processed data. They can interpret summary reports, highlight usage anomalies, and query pre-aggregated tables in Athena or Redshift. The key is ensuring that a separate, deterministic system performs the underlying calculations.
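The division of labor can be sketched as follows. Deterministic code computes per-project monthly totals from line items, and only the resulting summary is handed to the copilot to explain. The rows here are hypothetical and only loosely shaped like cost-report records; they do not follow the actual CUR schema. `Decimal` avoids floating-point rounding in the sums. The 50% growth threshold for flagging anomalies is an arbitrary assumption.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical line items: (project tag, month, cost in USD as a string).
LINE_ITEMS = [
    ("genomics", "2024-05", "120.50"),
    ("genomics", "2024-06", "130.25"),
    ("imaging", "2024-05", "80.00"),
    ("imaging", "2024-06", "200.00"),
    ("", "2024-06", "15.75"),  # untagged resource
]


def monthly_totals(items):
    """Sum costs per (project, month); untagged spend stays visible, not dropped."""
    totals = defaultdict(Decimal)
    for project, month, cost in items:
        totals[(project or "UNTAGGED", month)] += Decimal(cost)
    return dict(totals)


def flag_anomalies(totals, prev, curr, threshold=Decimal("0.5")):
    """Return projects whose spend grew by more than `threshold` month over month."""
    flagged = []
    for (project, month), amount in totals.items():
        if month != curr:
            continue
        before = totals.get((project, prev))
        if before and (amount - before) / before > threshold:
            flagged.append(project)
    return sorted(flagged)
```

The copilot would then receive the totals and the flagged list and explain them in plain language. The arithmetic itself never passes through the model.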

Error Debugging and Log Analysis

While LLMs can help interpret individual error messages, comprehensive debugging requires correlation across multiple systems, time periods, and log sources. Current models cannot reliably perform that correlation on their own.

Limitations include:

- Real-time log correlation across services

- Root cause analysis for complex system failures

- Accurate stack trace interpretation

- Multi-service error propagation mapping

Copilots work better as debugging assistants than as autonomous problem solvers. They can summarize specific log excerpts, match known error patterns to solutions, and help humans prioritize investigation efforts.
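Matching known error patterns is one of the safer debugging tasks to delegate, because the matching itself can be deterministic. In the sketch below, a hypothetical runbook maps regular expressions to remediation hints. The copilot's role would be to explain the matched hint in context. The patterns and hints are illustrative assumptions, not an authoritative catalog.

```python
import re

# Hypothetical runbook: compiled pattern -> remediation hint.
RUNBOOK = [
    (re.compile(r"CUDA out of memory", re.IGNORECASE),
     "Reduce batch size or request a GPU with more memory."),
    (re.compile(r"No space left on device"),
     "Check disk usage on scratch storage and clean up temporary files."),
    (re.compile(r"Permission denied"),
     "Verify project membership and the ACLs on the target path."),
]


def match_known_errors(log_excerpt: str) -> list:
    """Return (line, hint) pairs for lines that match a known error pattern."""
    matches = []
    for line in log_excerpt.splitlines():
        for pattern, hint in RUNBOOK:
            if pattern.search(line):
                matches.append((line.strip(), hint))
                break  # one hint per line is enough for triage
    return matches
```

Lines that match no pattern are exactly the cases to escalate to a human. The model should not be left to speculate about root cause.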

Live System State Monitoring

Real-time monitoring requires processing constantly changing data streams, detecting patterns in noisy telemetry, and making time-sensitive decisions. Current LLMs cannot do these tasks reliably.

Challenging areas:

- Memory, CPU, and GPU utilization analysis

- Active job status correlation

- Stuck state detection across distributed systems

- Performance anomaly identification

Instead, copilots can interpret monitoring summaries, explain what metrics mean, and recommend investigation steps based on pre-analyzed data.
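Here is a sketch of the pre-analysis step. It assumes a monitoring system already exports per-job GPU utilization samples. Deterministic code reduces the raw series to a short summary that a copilot can explain. The job IDs, sample values, and the 10% idle threshold are hypothetical.

```python
from statistics import mean

# Hypothetical GPU utilization samples (percent) per job, e.g. one per minute.
SAMPLES = {
    "job-101": [92, 88, 95, 90],
    "job-102": [3, 0, 2, 1],
    "job-103": [45, 60, 55, 40],
}


def summarize_gpu_usage(samples, idle_threshold=10.0):
    """Reduce raw utilization series to a compact summary for the copilot."""
    summary = []
    for job_id, values in sorted(samples.items()):
        avg = mean(values)
        summary.append({
            "job": job_id,
            "avg_util": round(avg, 1),
            "possibly_idle": avg < idle_threshold,
        })
    return summary
```

With this input, only `job-102` is flagged as possibly idle. The copilot's task shrinks to explaining that the job appears to hold a GPU without using it and suggesting next steps, which plays to its strengths.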

Strategy for Research IT Copilot Success

To maximize the potential of the Research IT copilot, a targeted strategy is essential. Here’s how success can be achieved:

Key Focus Areas: Capabilities to Enhance Efficiency

Turn Intentions Into Action

The Research IT copilot simplifies complex tasks by translating user intent into concrete actions. Examples include provisioning virtual machines, mounting data, and managing access requests.

Deliver Precise Documentation Answers

Users can quickly get clear answers drawn from critical documentation. This includes standard operating procedures (SOPs), security policies, and data access protocols, which supports both compliance and clarity.

Provide Tailored Recommendations

The copilot offers expert suggestions for tools and pipelines tailored to diverse use cases. This helps users identify the most effective solutions to meet their specific research or operational needs.

Assist With Template Creation

Streamline workflows by leveraging the copilot to generate templates for job scripts, Slurm submission files, and metadata. This ensures consistency and reduces the time spent on manual setup.
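Template generation works well when the copilot fills in a fixed, reviewed template rather than writing scripts from scratch. The sketch below renders a Slurm batch script from a few parameters using Python's `string.Template`. The `#SBATCH` options shown (`--job-name`, `--partition`, `--cpus-per-task`, `--mem`, `--time`, `--gres`) are standard Slurm directives. The `general` partition, the `python` module, and the other defaults are assumptions that would need to match your cluster.

```python
from string import Template

# Reviewed template; only the $-placeholders are filled from user intent.
SBATCH_TEMPLATE = Template("""#!/bin/bash
#SBATCH --job-name=$job_name
#SBATCH --partition=$partition
#SBATCH --cpus-per-task=$cpus
#SBATCH --mem=$mem
#SBATCH --time=$time_limit
$gres_line
module load $module
$command
""")


def render_sbatch(job_name, command, cpus=4, mem="16G", time_limit="04:00:00",
                  gpus=0, partition="general", module="python"):
    """Render a batch script; 'general' and 'python' are placeholder defaults."""
    # Restrict job names to a safe character set before substitution.
    if not job_name.replace("-", "").replace("_", "").isalnum():
        raise ValueError("job name should be alphanumeric (with - or _)")
    gres_line = f"#SBATCH --gres=gpu:{gpus}" if gpus > 0 else ""
    return SBATCH_TEMPLATE.substitute(
        job_name=job_name, partition=partition, cpus=cpus, mem=mem,
        time_limit=time_limit, gres_line=gres_line, module=module, command=command,
    )
```

For example, `render_sbatch("deseq2-run", "Rscript run_deseq2.R", gpus=1)` (with a hypothetical script name) produces a script with a `--gres=gpu:1` line. Keeping the template under review means the copilot only chooses values, never the script's structure.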

Points for Caution

- Cost Aggregation: Complex joins and tag-based dependencies must be computed accurately. That work belongs in deterministic queries, not the model.

- Live Error Tracing: Dependable, structured logs and clear causal links are prerequisites for accurate tracing.

- Multi-System Correlation: Keep requests within what fits in the model's context and reasoning capacity. Broader correlation belongs in dedicated tooling.

- Security and Safety Decisions: Exercise additional care to prevent inaccuracies or assumptions that could lead to unsafe outcomes.

Building Effective Hybrid Solutions

The most successful implementations combine LLM strengths with traditional system capabilities. This hybrid approach maximizes user experience while maintaining reliability and accuracy.

LLM Responsibilities

- User interface and natural language processing

- Intent interpretation and context understanding

- Workflow navigation and guidance

- Result explanation and recommendations

Backend System Responsibilities

- Complex data queries and calculations

- System state monitoring and analysis

- Provisioning execution and validation

- Security enforcement and audit logging
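The backend side of this split can be sketched concretely. The copilot submits a request on a user's behalf, and the backend, not the model, checks authorization and writes an audit record. The membership table, action names, and log format are hypothetical. In practice these would come from your identity provider and logging stack.

```python
import json
from datetime import datetime, timezone

# Hypothetical project membership, normally sourced from the identity provider.
PROJECT_MEMBERS = {"rnaseq": {"alice", "bob"}, "imaging": {"carol"}}

AUDIT_LOG = []  # stand-in for an append-only audit store


def handle_request(user: str, action: str, project: str) -> bool:
    """Authorize a copilot-submitted request and record the decision."""
    allowed = user in PROJECT_MEMBERS.get(project, set())
    AUDIT_LOG.append(json.dumps({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "action": action,
        "project": project,
        "decision": "allow" if allowed else "deny",
        "source": "copilot",
    }))
    return allowed
```

Every decision is logged, whether allowed or denied. The copilot can explain a denial to the user while the authoritative record stays outside the model.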

Implementation Framework

Phase 1: Start with High-Success Areas

Focus initial implementations on documentation search, tool recommendations, and simple provisioning workflows. These provide immediate value while building organizational confidence.

Phase 2: Add Structured Integrations

Develop APIs that allow copilots to trigger backend processes while maintaining proper validation and error handling. This enables more complex workflows without sacrificing reliability.

Phase 3: Expand with Guardrails

Gradually extend capabilities to areas requiring more precision, but implement strong validation, human oversight, and rollback mechanisms.
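A guardrail of this kind can be as simple as a risk gate in front of execution. In this sketch, actions tagged as high-risk are queued for human approval instead of running. Every executed action records an undo step so it can be rolled back. The risk classification and the action names are assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical classification; a real policy would be owned by IT and security staff.
HIGH_RISK_ACTIONS = {"delete_volume", "grant_admin", "purchase_savings_plan"}


@dataclass
class Executor:
    pending_approval: list = field(default_factory=list)
    undo_stack: list = field(default_factory=list)

    def submit(self, action: str, run: Callable[[], None], undo: Callable[[], None]) -> str:
        """Run low-risk actions immediately; hold high-risk ones for a human."""
        if action in HIGH_RISK_ACTIONS:
            self.pending_approval.append((action, run, undo))
            return "pending_approval"
        run()
        self.undo_stack.append(undo)
        return "executed"

    def approve(self, index: int) -> None:
        """Called by a human reviewer to release a held action."""
        _action, run, undo = self.pending_approval.pop(index)
        run()
        self.undo_stack.append(undo)

    def rollback_last(self) -> None:
        """Undo the most recently executed action, if any."""
        if self.undo_stack:
            self.undo_stack.pop()()
```

The undo stack is deliberately simple. Real rollback for provisioning or permission changes usually needs idempotent, backend-specific compensating actions.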

Implementation Best Practices

Design for Intent, Not Automation

A copilot should focus on understanding what users want to accomplish rather than fully automating complex processes. This approach reduces risk while maximizing utility.

Implement Progressive Disclosure

Start with simple capabilities and gradually expose more advanced features as users build confidence and understanding.

Maintain Human Oversight

Critical decisions should always involve human review, especially for security, compliance, or financial implications.

Build Feedback Loops

Implement mechanisms to capture when copilot recommendations succeed or fail, enabling continuous improvement of the underlying knowledge base and logic.
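A feedback loop can start as little more than structured outcome records. This sketch uses a hypothetical record format and computes the success rate per intent. Intents with low success can then be prioritized for knowledge-base or logic fixes. The 0.7 review threshold is an arbitrary assumption.

```python
from collections import defaultdict

# Hypothetical outcome records: (intent, whether the user reported success).
FEEDBACK = [
    ("provision_vm", True), ("provision_vm", True), ("provision_vm", False),
    ("slurm_help", True), ("slurm_help", True),
    ("cost_question", False), ("cost_question", False), ("cost_question", True),
]


def success_rates(records):
    """Return {intent: fraction of interactions marked successful}."""
    counts = defaultdict(lambda: [0, 0])  # intent -> [successes, total]
    for intent, ok in records:
        counts[intent][0] += int(ok)
        counts[intent][1] += 1
    return {intent: s / n for intent, (s, n) in counts.items()}


def needs_review(records, threshold=0.7):
    """Intents whose success rate falls below the threshold, worst first."""
    rates = success_rates(records)
    return sorted((i for i, r in rates.items() if r < threshold), key=lambda i: rates[i])
```

With the sample records, cost questions surface first for review. This is consistent with the cautions above about delegating cost analysis.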

Your Path Forward

AI copilots represent a significant opportunity to improve Research IT service delivery, but success requires strategic focus on proven use cases. Start with natural language interfaces for self-service provisioning, documentation search, and tool recommendations. These are the areas where LLMs demonstrate clear advantages.

Avoid early implementations in cost analysis, complex debugging, or real-time monitoring without proper backend systems to handle the heavy lifting. These areas require structured approaches that complement rather than replace traditional tools.

The most effective copilot implementations will feel like having an expert assistant who understands your environment, can quickly find relevant information, and guides users through standard processes. That assistant also knows when to escalate complex issues to human experts.

Consider beginning with a pilot focused on documentation search and simple provisioning workflows. This approach provides immediate value while building the foundational integrations needed for more advanced capabilities.