
Many organizations assess fraud risk as a component of their overall SOX risk assessment, and Compliance plays a vital role in that effort.

The name SOX comes from Senator Paul Sarbanes and Representative Michael G. Oxley, who wrote the bill in response to several high-profile corporate scandals in the United States, such as Enron, WorldCom, and Tyco. The United States Congress passed the Sarbanes-Oxley Act in 2002, establishing rules to protect the public from fraudulent or otherwise improper corporate practices. Its primary objective was to increase transparency in corporate financial reporting and to establish a formalized system of checks and balances. Implementing SOX security controls also helps protect the company from data theft through insider threats and cyberattacks. The SOX Act applies to all publicly traded companies in the United States, as well as to wholly owned subsidiaries and foreign companies that are publicly traded.

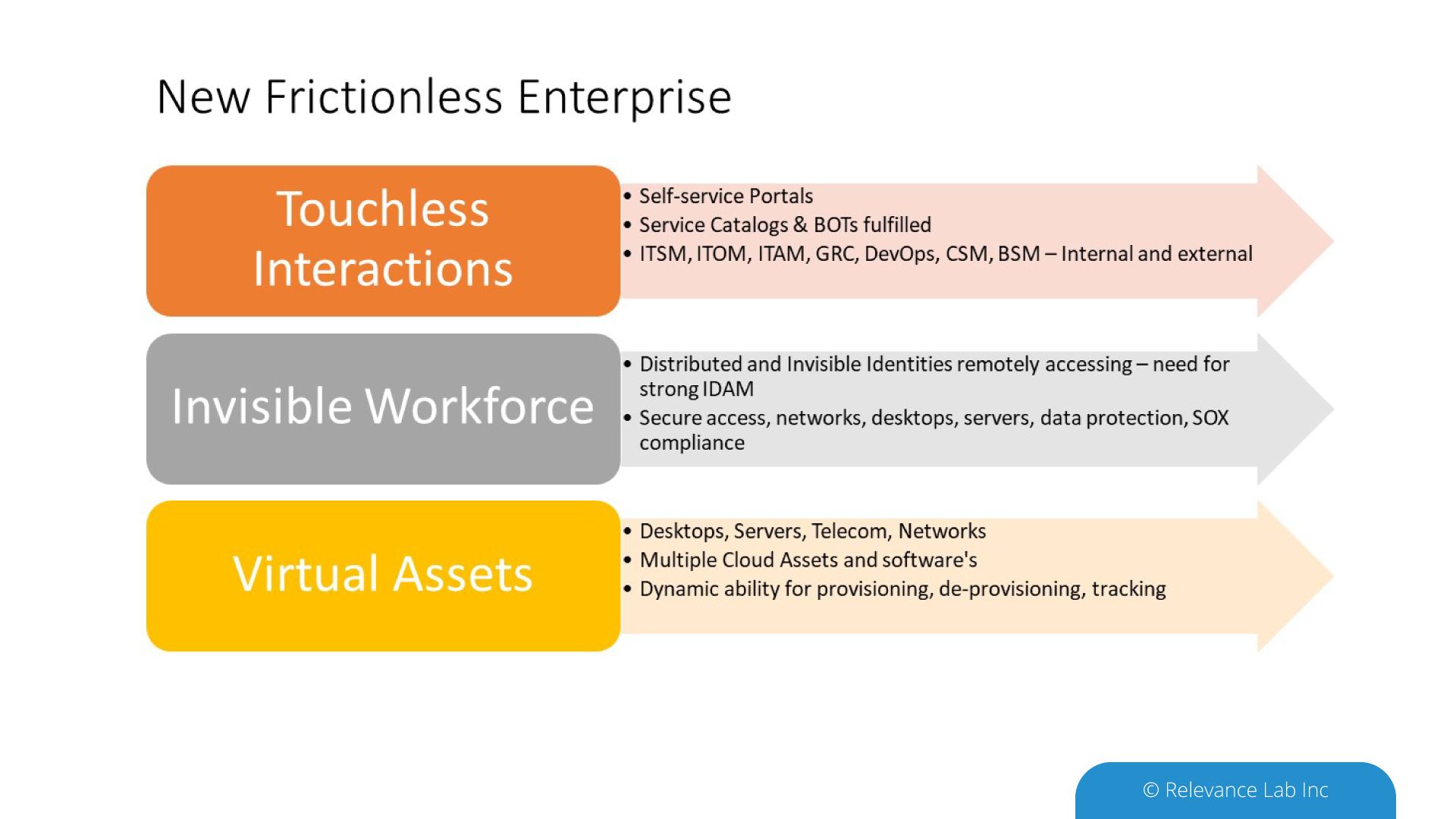

Compliance is essential for an organization to avoid malpractice in its day-to-day business operations, especially during times of unprecedented challenge and change such as those we are experiencing today. COVID-19 has changed much about the way we do business. Workplaces have been replaced by home offices, which makes Compliance harder to enforce and increases the risk of fraud.

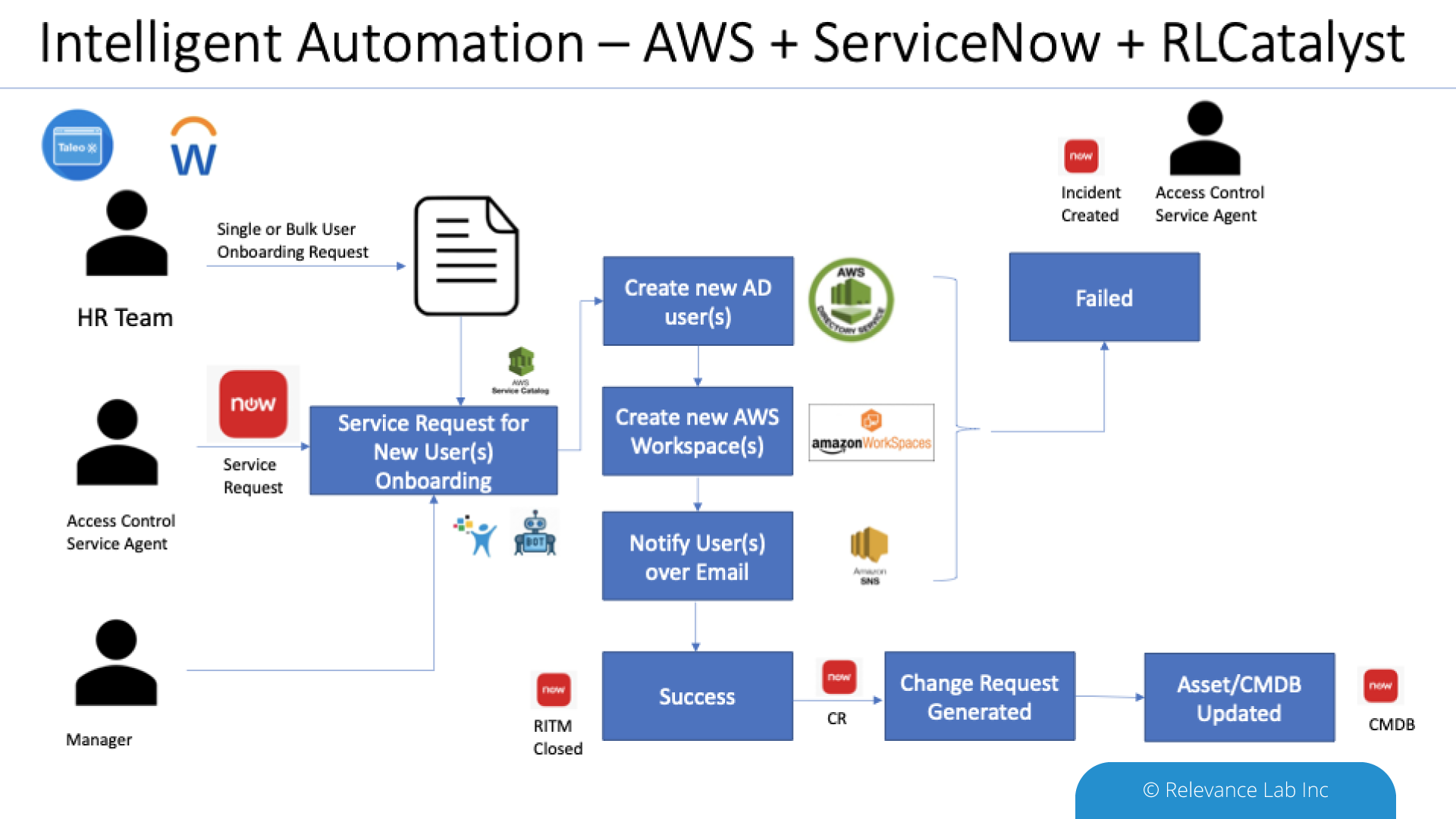

With many employees working from home or from remote locations during the COVID-19 situation, managing resources and time has become more challenging. User provisioning, the topic here, carries its own risks, such as failing to identify unauthorized system access for individual users based on their roles or responsibilities.

Most organizations follow a defined user provisioning process, such as submitting a user access request that includes the relevant details and approvals:

- User name

- User Type

- Application

- Roles

- Obtaining line manager approval

- Application owner approval

Further steps follow as the policy requires, and finally IT grants the access. Several organizations still follow a manual process, which creates a security risk.
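
The request-and-approval flow above can be sketched in a few lines of Python. This is a minimal illustration, not any particular tool's implementation: the `AccessRequest` class, the `decide` function, the `REQUIRED_APPROVALS` policy, and the audit log layout are all hypothetical names chosen for the example. It assumes a policy in which both line manager and application owner approval are required before access is granted.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List

# Assumed policy (hypothetical): both approvals are required before access is granted.
REQUIRED_APPROVALS = ("line_manager", "application_owner")


@dataclass
class AccessRequest:
    user_name: str
    user_type: str
    application: str
    roles: List[str]
    # Approver role -> True (approved) or False (rejected); a missing key means pending.
    approvals: Dict[str, bool] = field(default_factory=dict)


def decide(request: AccessRequest, audit_log: List[dict]) -> str:
    """Return 'granted', 'rejected', or 'pending' and record the decision as evidence."""
    if any(request.approvals.get(a) is False for a in REQUIRED_APPROVALS):
        decision = "rejected"   # any rejection blocks provisioning
    elif all(request.approvals.get(a) is True for a in REQUIRED_APPROVALS):
        decision = "granted"    # every required approver has approved
    else:
        decision = "pending"    # at least one approval is still outstanding
    audit_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_name": request.user_name,
        "application": request.application,
        "roles": list(request.roles),
        "decision": decision,
    })
    return decision
```

Every call appends an entry to the audit log, including pending and rejected outcomes, so the log can double as an evidence trail for reviewers.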

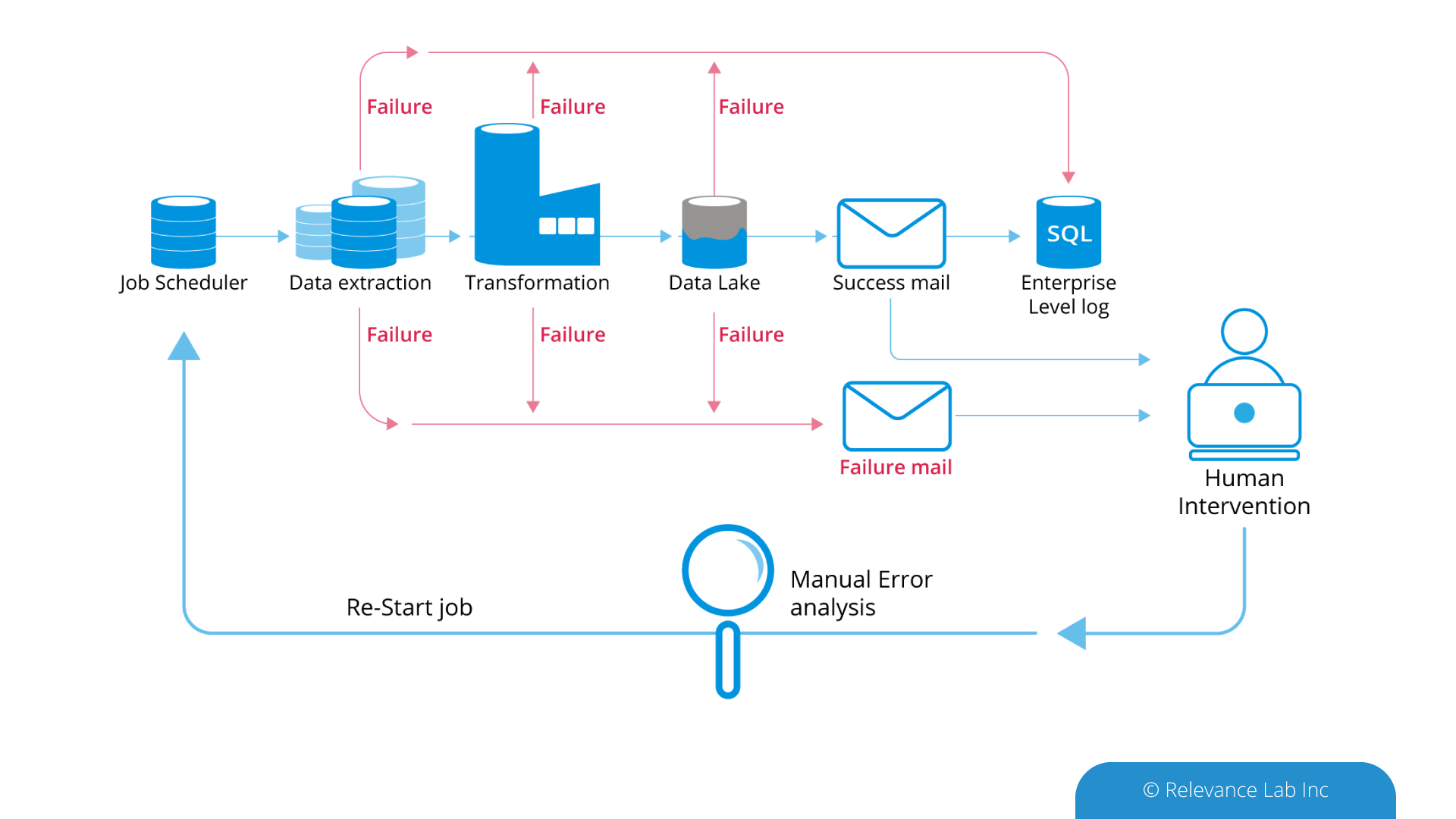

The traditional way of processing a user provisioning request has become complicated, especially during COVID-19, because of a shortage of resources or a lack of available resources to complete a task. Reasons include:

- Different time zones

- No backup resources

- Changes in business plans

- Changes in request priority

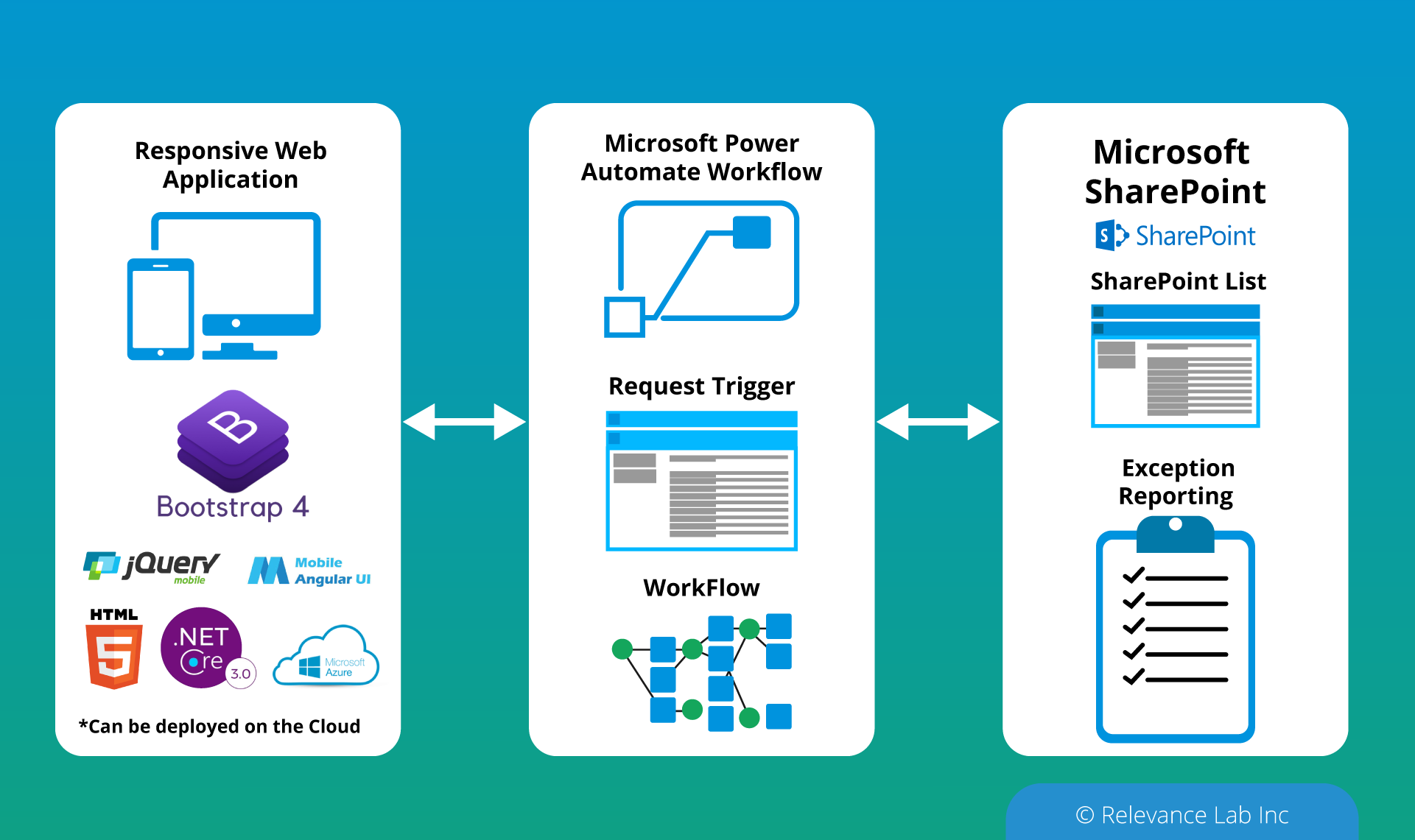

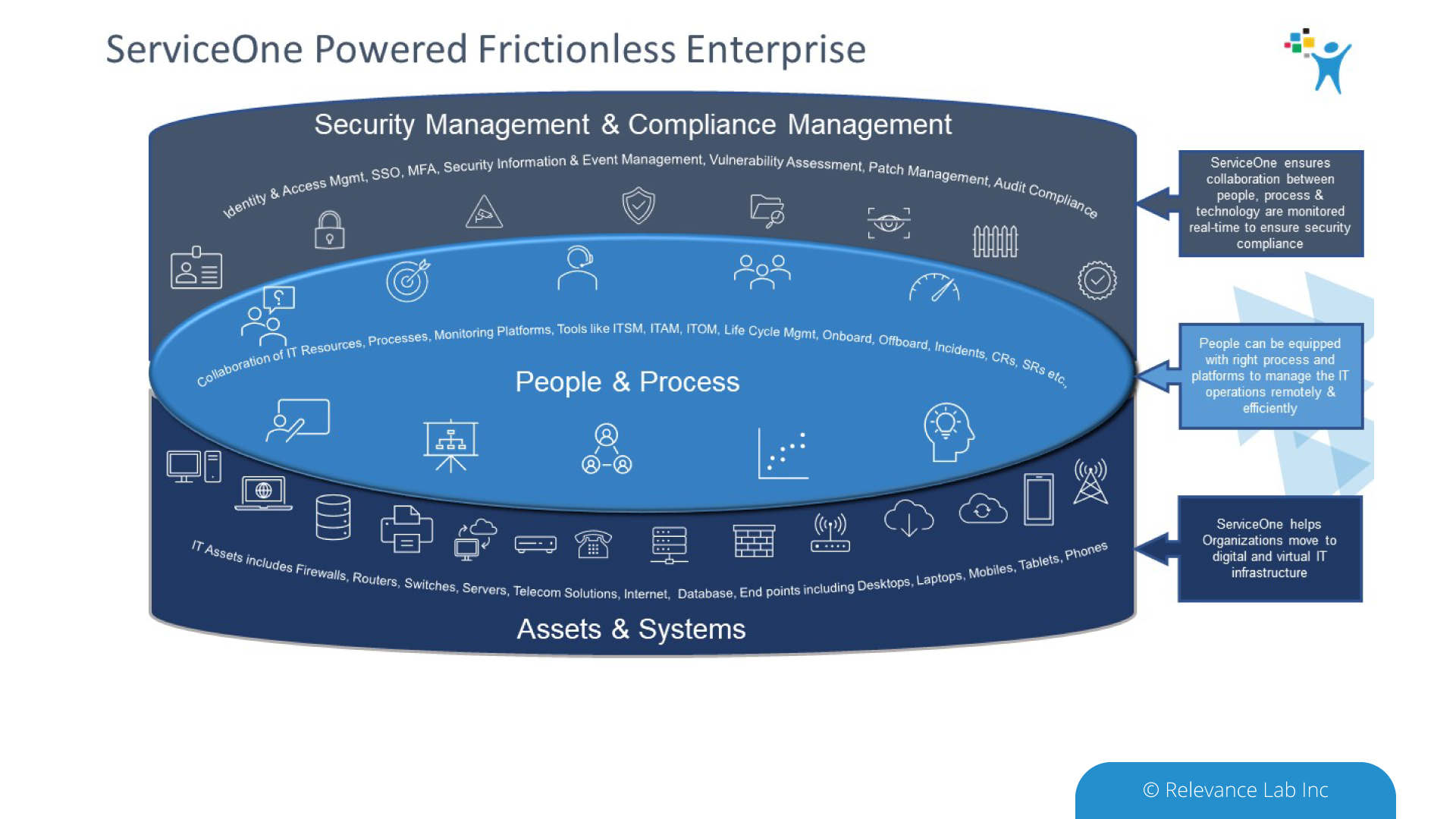

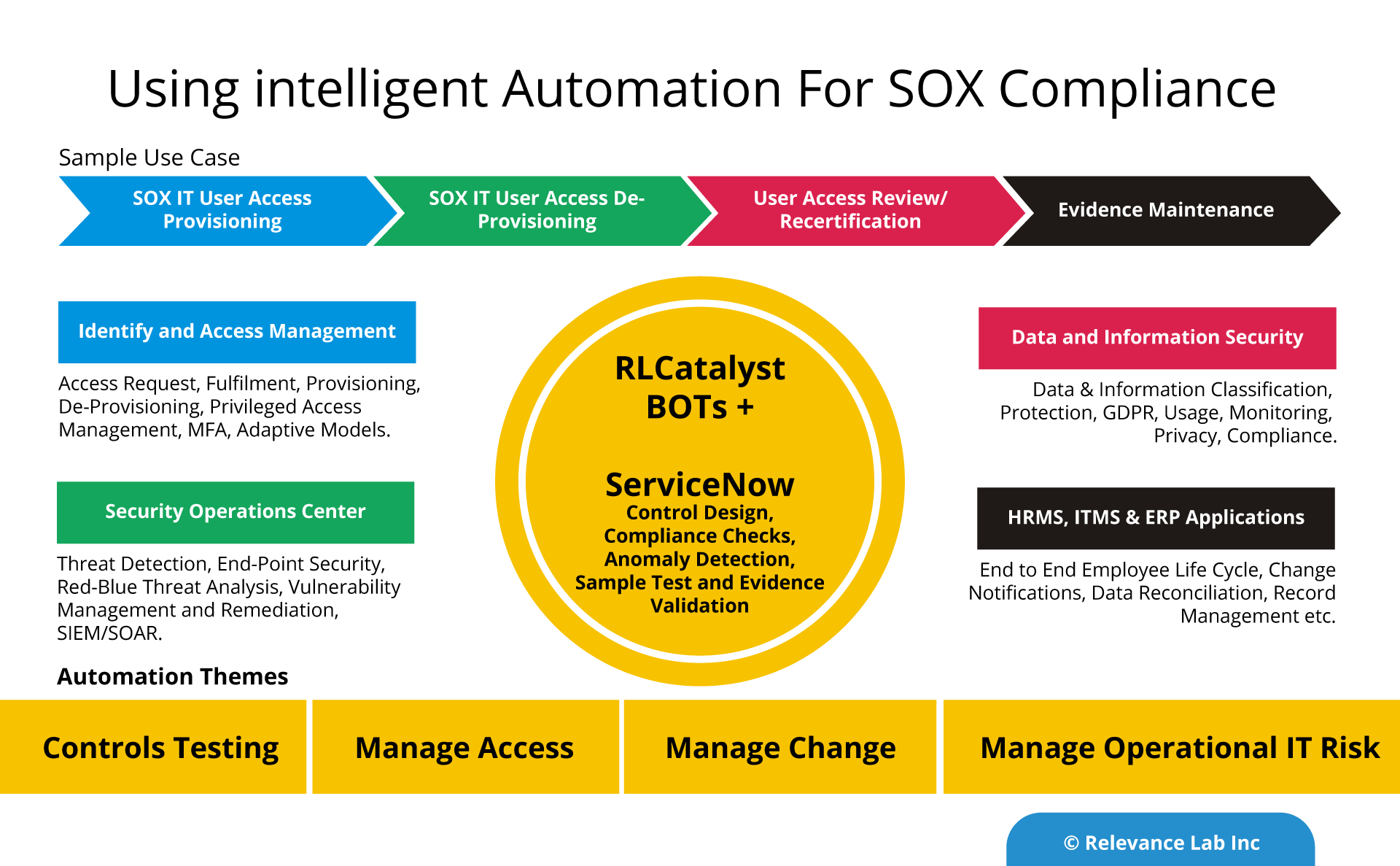

In such a situation, automation plays an important role. Automation has helped reduce manual work, labor cost, and dependency on individual resources, and has improved time management. An automated process built with proper design, tools, and security reduces the risk of material misstatement, unauthorized access, and fraudulent activity. Using ServiceNow has also helped in tracking and archiving the evidence (an evidence repository) that is essential for Compliance. Effective Compliance results in better business performance.

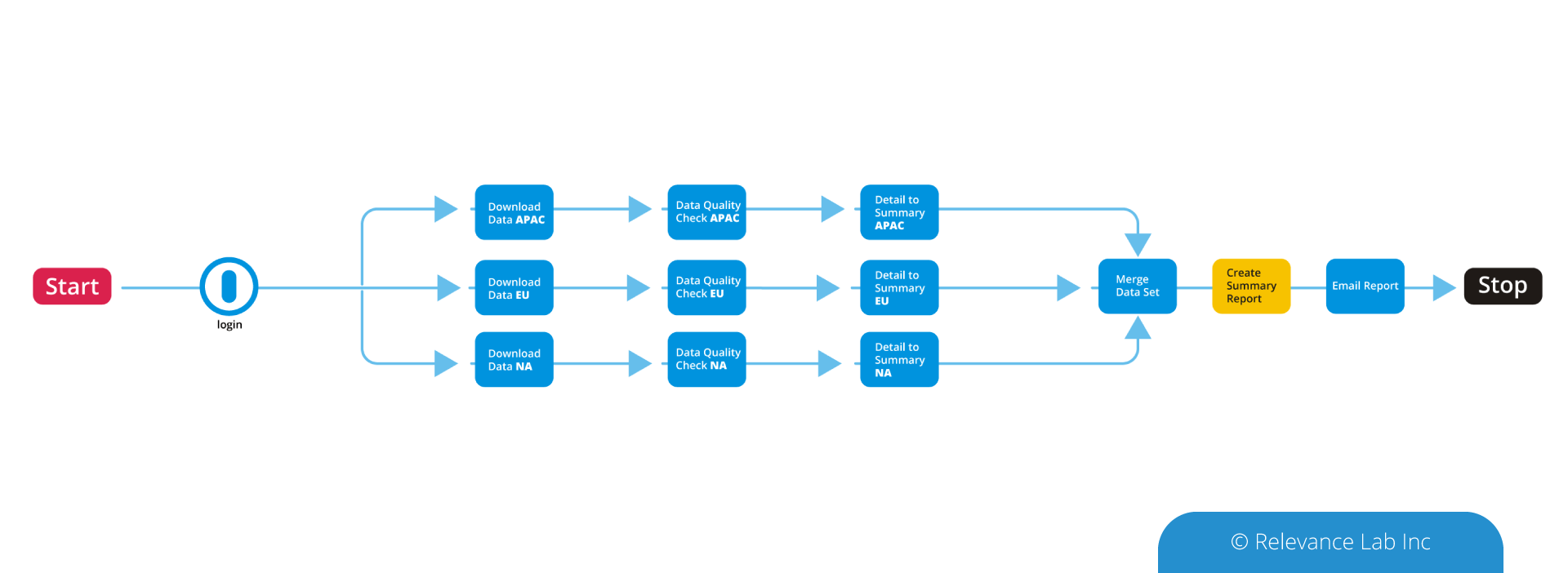

Intelligent Automation for SOX Compliance can bring significant benefits such as agility, better quality, and proactive Compliance. The table below provides further details on the IT general controls.

Example – User Access Management

| Risk | Control | Manual | Automation |
|---|---|---|---|
| Unauthorized users are granted access to applicable logical access layers. Key financial data/programs are intentionally or unintentionally modified. | New and modified user access to the software is approved by an authorized approver as per the company IT policy. All access is appropriately provisioned. | Access to the system is provided manually by the IT team based on the approval given as per the IT policy and the roles and responsibilities requested. The SOD (Segregation of Duties) check is performed manually by Process Owners/Application Owners as per the IT policy. | Access to the system is provided automatically by an auto-provisioning script designed as per the company IT policy. The BOT checks for SOD role conflicts and provides the information to the Process Owners/Application Owners as per the policy. If the approver rejects the request, the BOT provides no access to the user in the system, and audit logs are maintained for Compliance purposes. |
| Unauthorized users are granted privileged rights. Key financial data/programs are intentionally or unintentionally modified. | Privileged access to the software, including administrator and superuser accounts, is appropriately restricted. | Access to the system is provided manually by the IT team based on the approval given as per the IT policy. A manual validation check and approval must be provided by Process Owners/Application Owners for restricted access to the system as per the company IT policy. | Access to the system is provided automatically by an auto-provisioning script designed as per the company IT policy. If the approver rejects the request, the BOT provides no access to the user in the system, and audit logs are maintained for Compliance purposes. The BOT can restrict the count and duration of access to the system based on the configuration. |
| Unauthorized users are granted access to applicable logical access layers. Key financial data/programs are intentionally or unintentionally modified. | Access requests to the application are properly reviewed and authorized by management. | User access reports need to be extracted manually for access review, using tools or with the help of IT. Review comments need to be provided to IT for de-provisioning of access. | The BOT can help the reviewer extract the system-generated user report. The BOT can help compare the active user listing with the HR termination listing to identify terminated users. The BOT can be configured to de-provision the access of users identified as unauthorized in the review report. |
| Unauthorized users are granted access to applicable logical access layers if access is not removed on a timely basis. | Terminated application user access rights are removed on a timely basis. | System access is deactivated manually by the IT team based on the approval provided as per the IT policy. | System access can be deactivated by an auto-provisioning script designed as per the company IT policy. The BOT can be configured to check the user's termination date and deactivate system access if SSO is enabled. The BOT can be configured to deactivate user access to the system based on approval. |
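
Two of the automated checks in the table, the SOD role conflict check and the comparison of the active user listing with the HR termination listing, can be sketched as follows. This is only an illustration under stated assumptions: the conflict matrix, the role names, the user IDs, and both function names are hypothetical, and a real BOT would read these listings from the application and the HR system.

```python
from datetime import date

# Hypothetical conflict matrix: pairs of roles one user should not hold together.
SOD_CONFLICTS = {
    frozenset({"CREATE_VENDOR", "APPROVE_PAYMENT"}),
    frozenset({"POST_JOURNAL", "APPROVE_JOURNAL"}),
}


def sod_conflicts(existing_roles, requested_roles):
    """Return the conflicting role pairs a user would hold if the request were granted."""
    combined = set(existing_roles) | set(requested_roles)
    # A pair is a conflict only when both of its roles are in the combined set.
    return sorted(tuple(sorted(pair)) for pair in SOD_CONFLICTS if pair <= combined)


def terminated_with_access(active_users, hr_terminations, as_of):
    """Return active user IDs whose HR termination date is on or before `as_of`.

    active_users: iterable of user IDs from the application's active user listing.
    hr_terminations: dict mapping user ID -> termination date from the HR listing.
    """
    return sorted(
        user for user in active_users
        if user in hr_terminations and hr_terminations[user] <= as_of
    )


# Usage with made-up data.
conflicts = sod_conflicts(["CREATE_VENDOR"], ["APPROVE_PAYMENT"])
to_deprovision = terminated_with_access(
    ["asmith", "bjones", "clee"],
    {"bjones": date(2020, 6, 30), "clee": date(2020, 12, 31)},
    as_of=date(2020, 7, 15),
)
```

In this sketch the conflict list would be passed to the Process Owner or Application Owner for a decision, and the de-provisioning list would feed the deactivation step, rather than either function changing access on its own.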

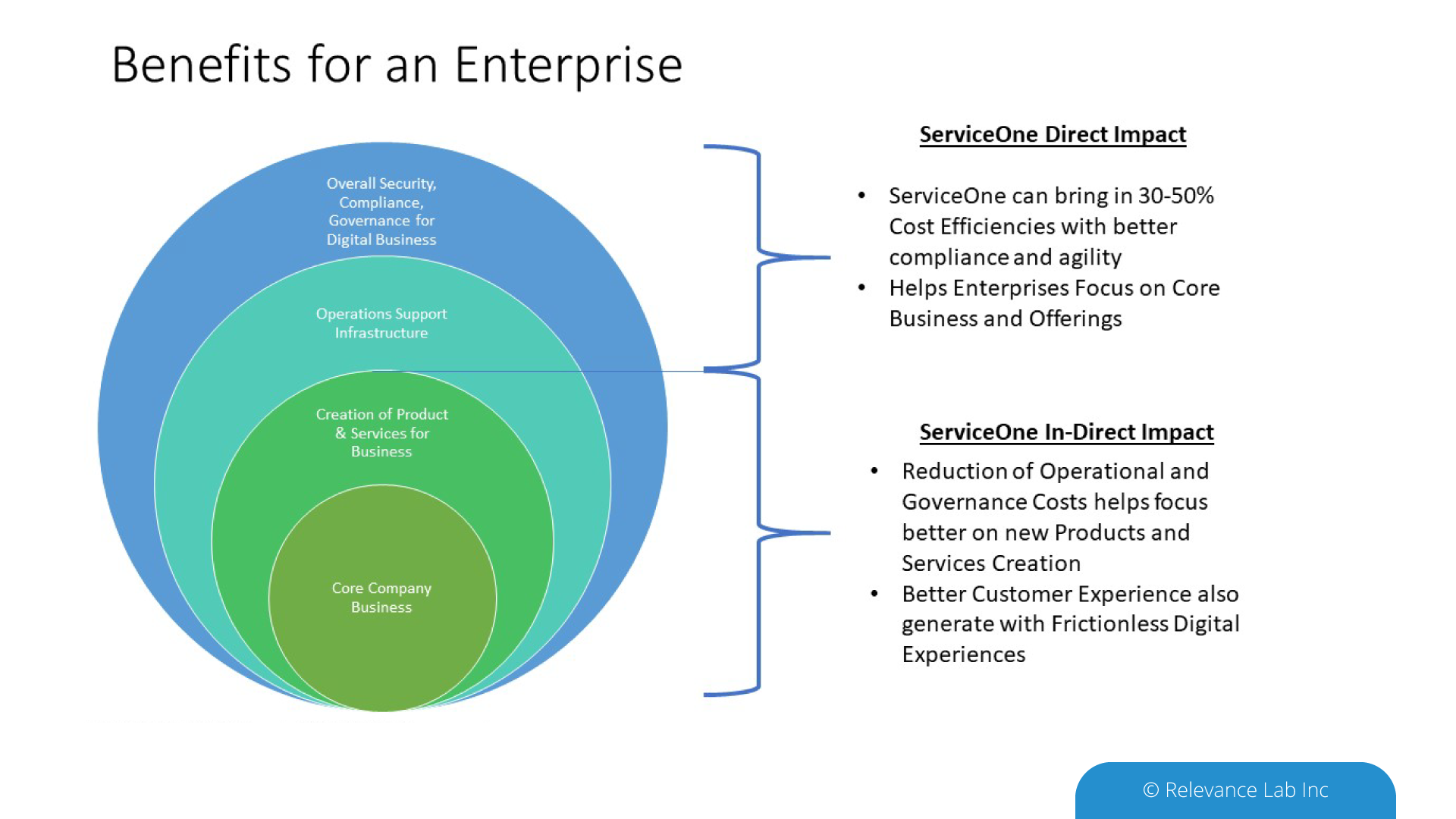

The table provides a detailed comparison of the manual and automated approaches. Automation can bring 40-50% gains in cost, reliability, and efficiency. The maturity model requires a three-step process of standardization, tool adoption, and process automation.

For more details or enquiries, please write to marketing@relevancelab.com