
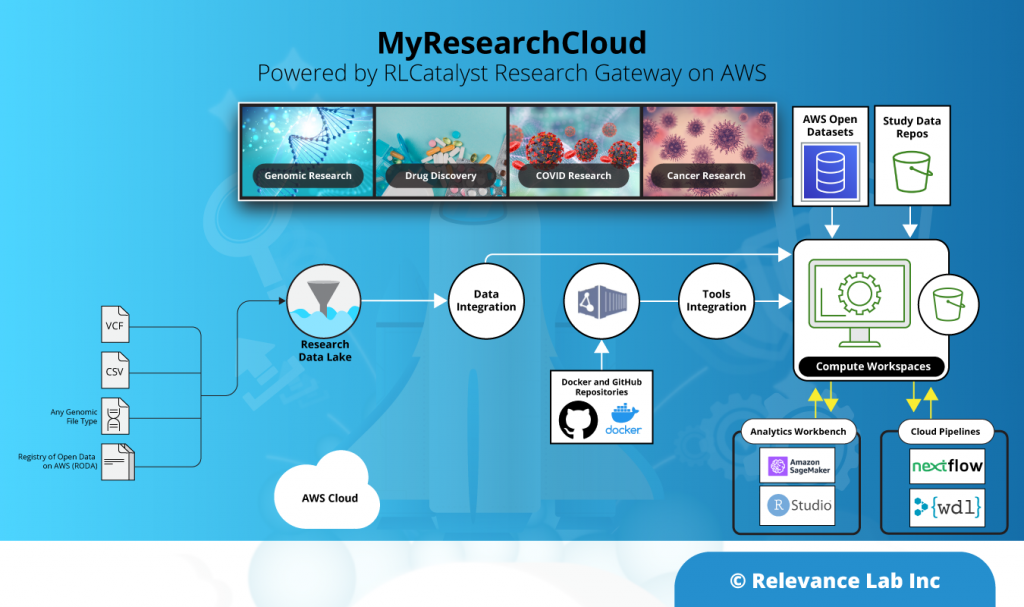

We aim to enable the next-generation cloud-based platform for collaborative research on AWS, with frictionless access to research tools, data sets, processing pipelines, and analytics workbenches. It takes less than 30 minutes to launch a “MyResearchCloud” working environment for Principal Investigators and Researchers, with security, scalability, and cost governance built in. The Software as a Service (SaaS) model is a preferable option for scientific research in the cloud, with tight control over data security, privacy, and regulatory compliance.

These are the top five use cases for which we have found MyResearchCloud to be a suitable solution for your scientific research needs:

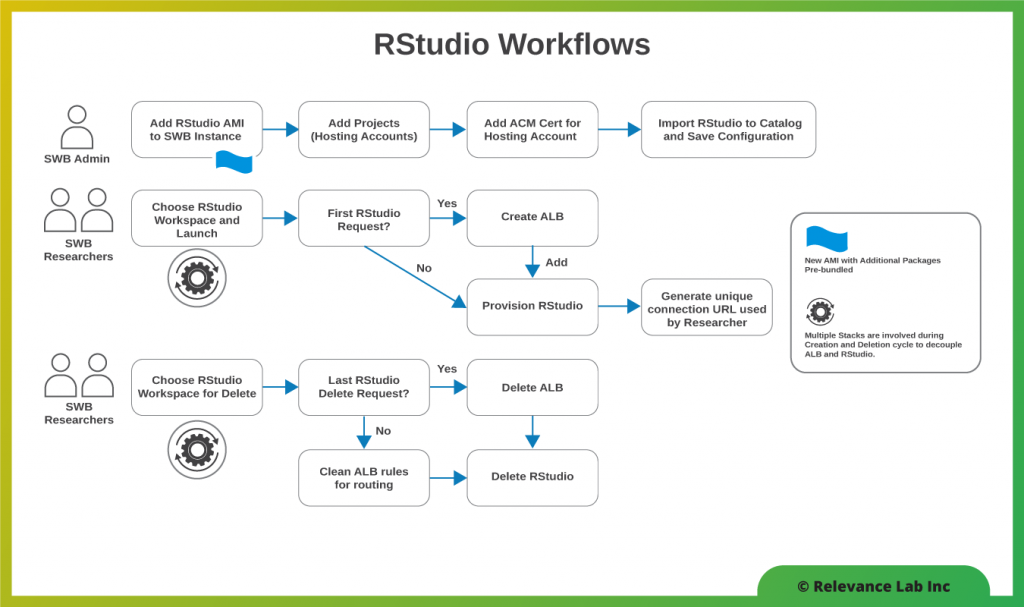

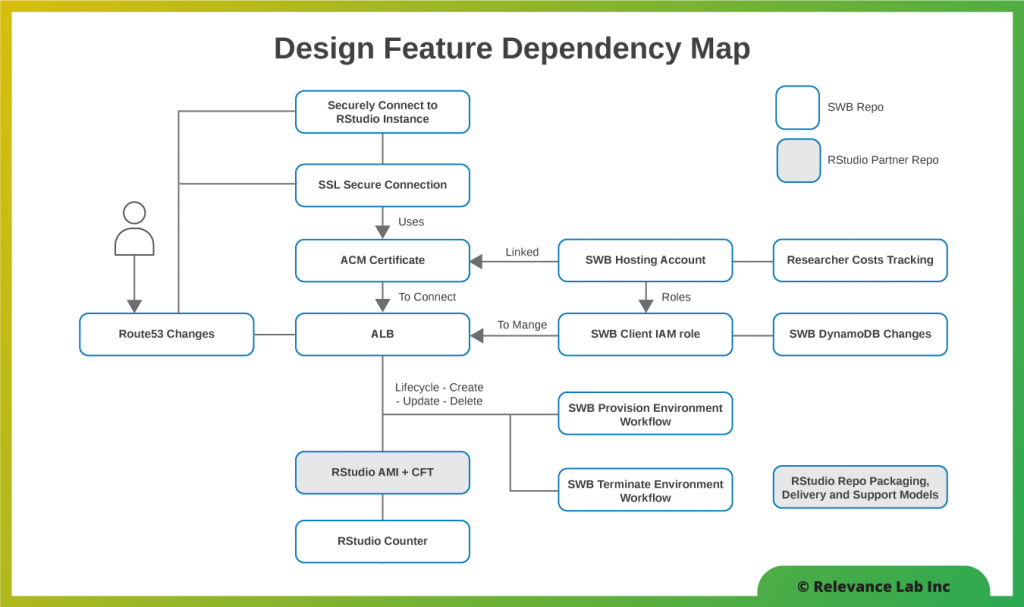

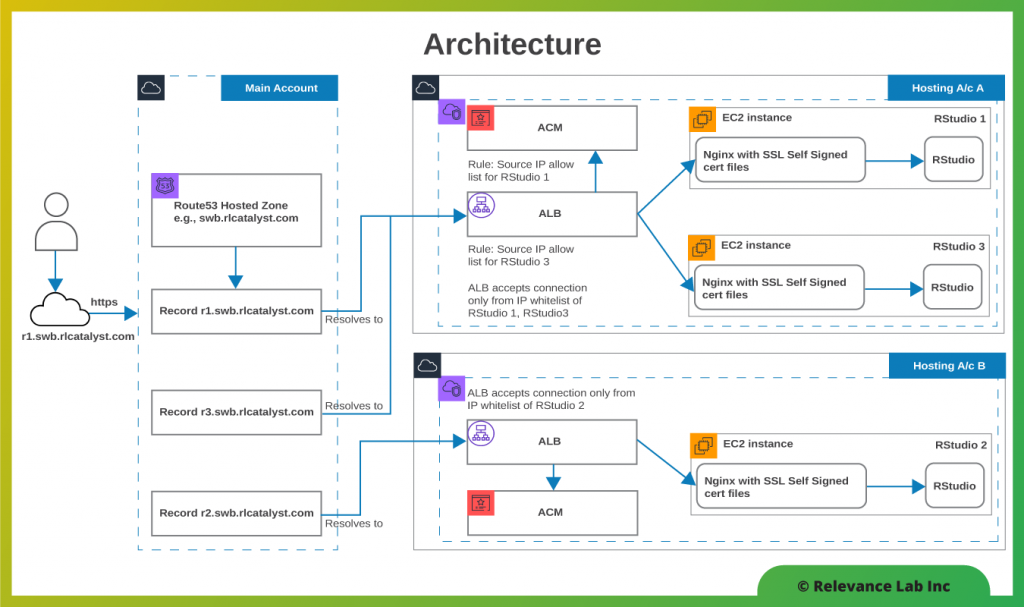

- An RStudio solution on AWS Cloud with the ability to connect securely (using SSL) without having to manage custom certificates and their lifecycle

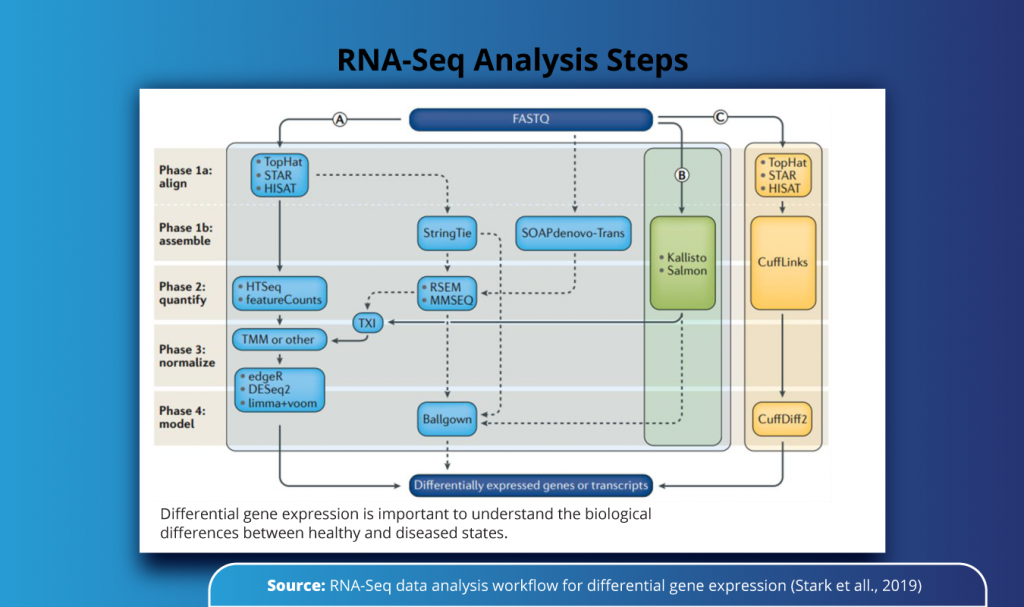

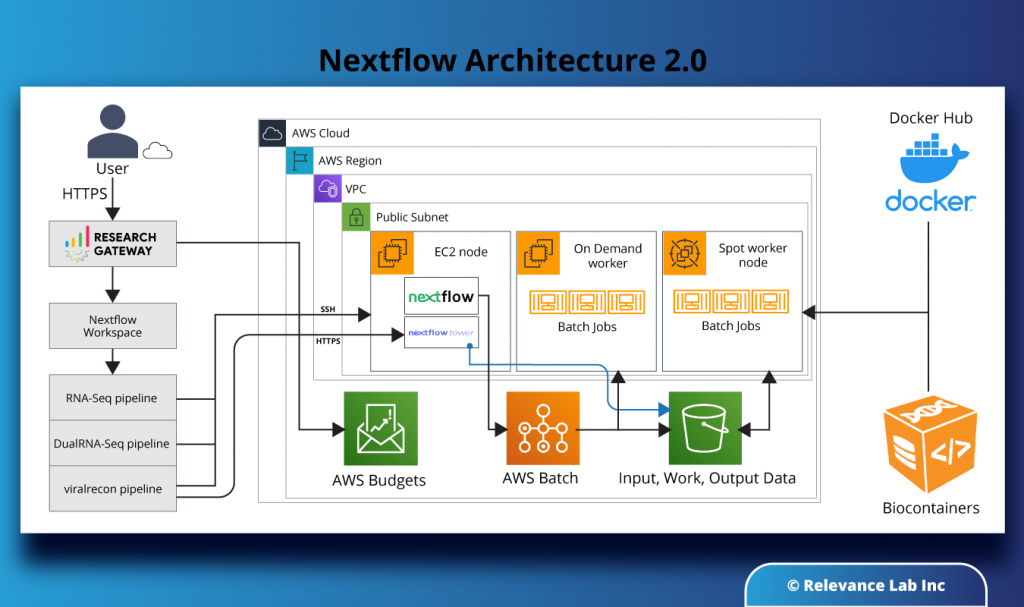

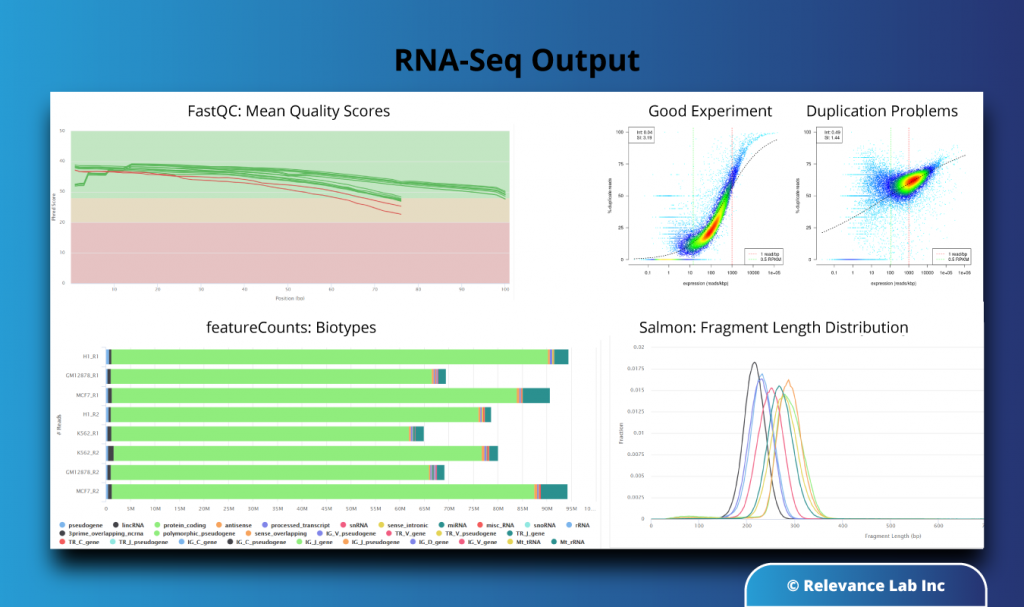

- Genomic pipeline processing using Nextflow and the open source Nextflow Tower, integrated with AWS Batch for easy deployment of open source pipelines and cost tracking per researcher and per pipeline

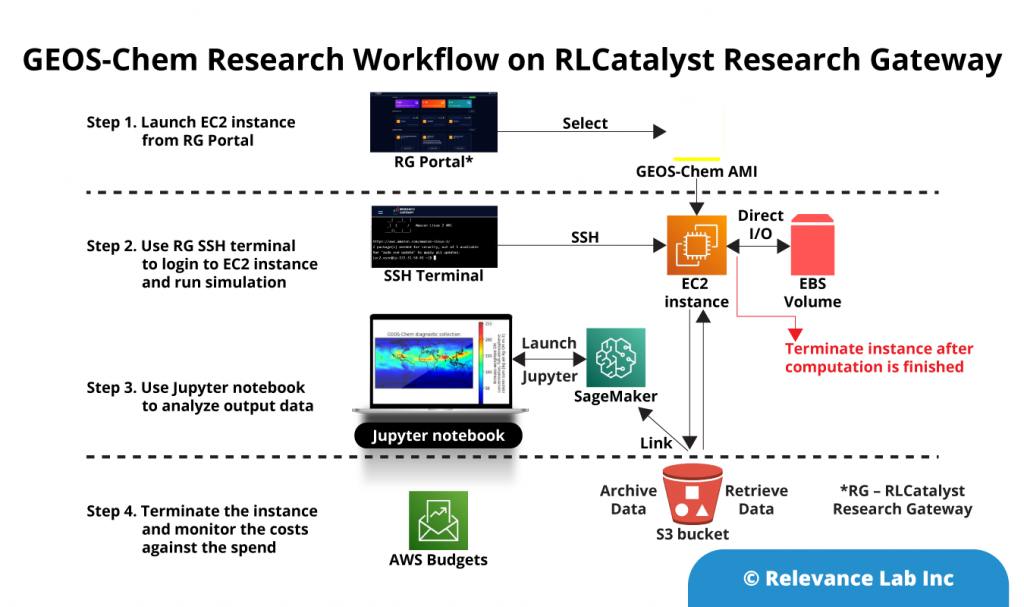

- Provide researchers with EC2 Linux and Windows servers on which to install their specific research tools and software, with the ability to add AMI-based research tools (both private and from AWS Marketplace) to MyResearchCloud in one click

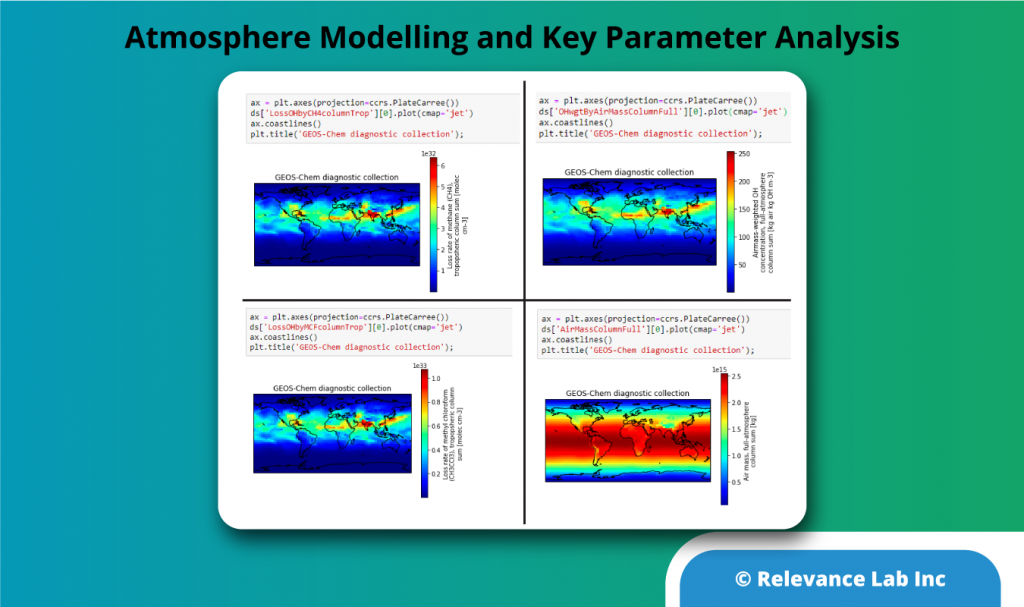

- Use the SageMaker AI/ML workbench to drive data research (such as COVID-19 impact analysis) with public data sets already available on AWS Cloud, and create study-specific data sets

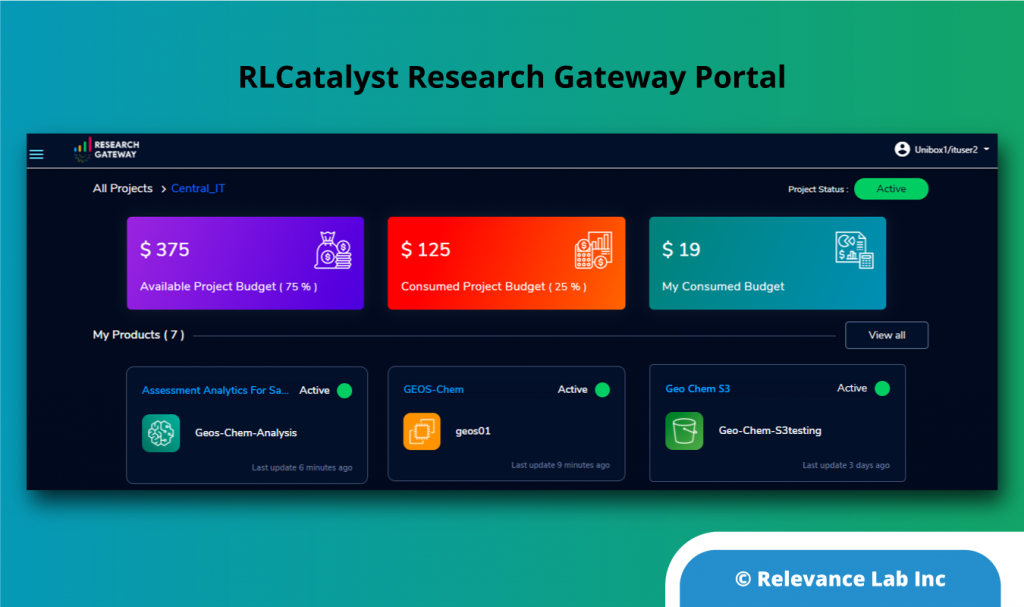

- Enable a small group of Principal Investigators and researchers to manage research grant programs with tight budget control, self-service provisioning, and research data sharing

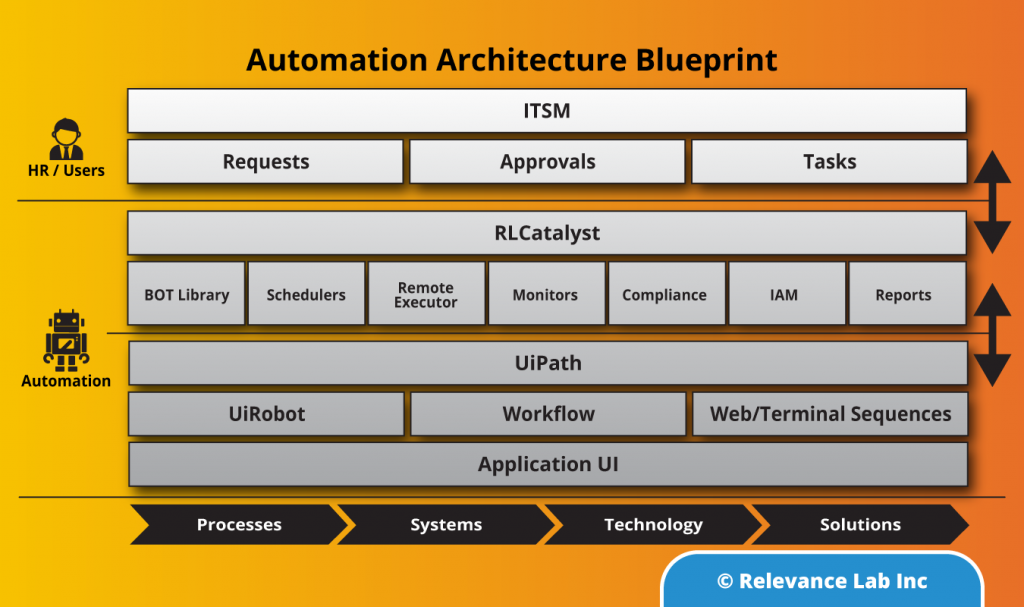

MyResearchCloud is powered by the RLCatalyst Research Gateway product and provides a basic environment with access to data, workspaces, analytics workbenches, and cloud pipelines, as explained in the figure below.

Currently, it is not easy for research institutes, their IT staff, and groups of principal investigators and researchers to leverage the cloud for their scientific research. On-premises data centers have constraints, and although these institutions have access to cloud accounts, converting a basic account into one with a secured network, secured access, the ability to create and publish a product and tools catalog, data ingress and egress, sharing of analysis, and tight budget control involves non-trivial tasks that divert attention away from ‘Science’ to ‘Servers’.

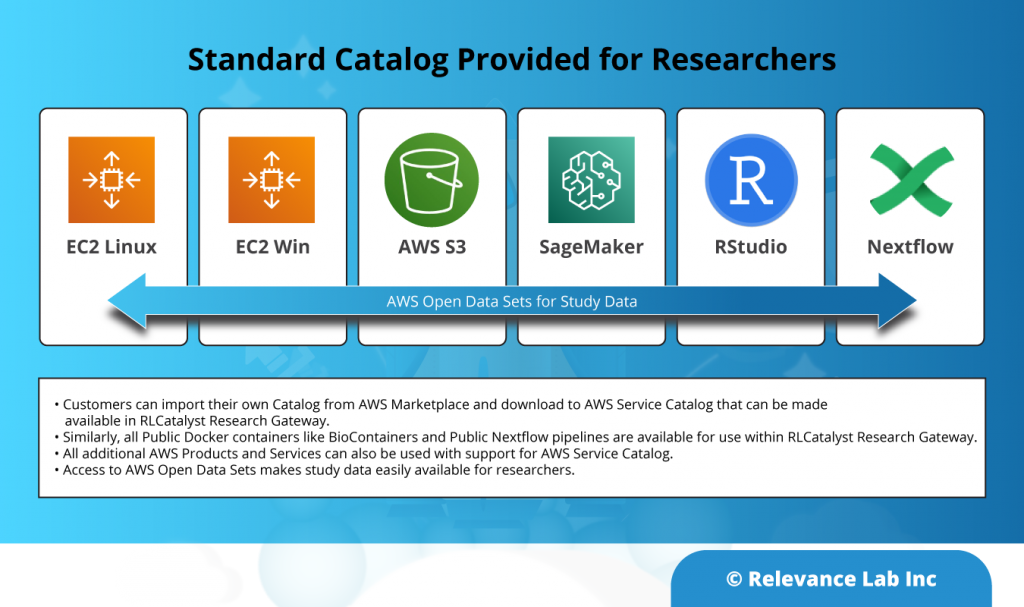

We aim to provide researchers with a standard catalog as an out-of-the-box solution, along with the ability to bring your own catalog, as explained in the figure below.

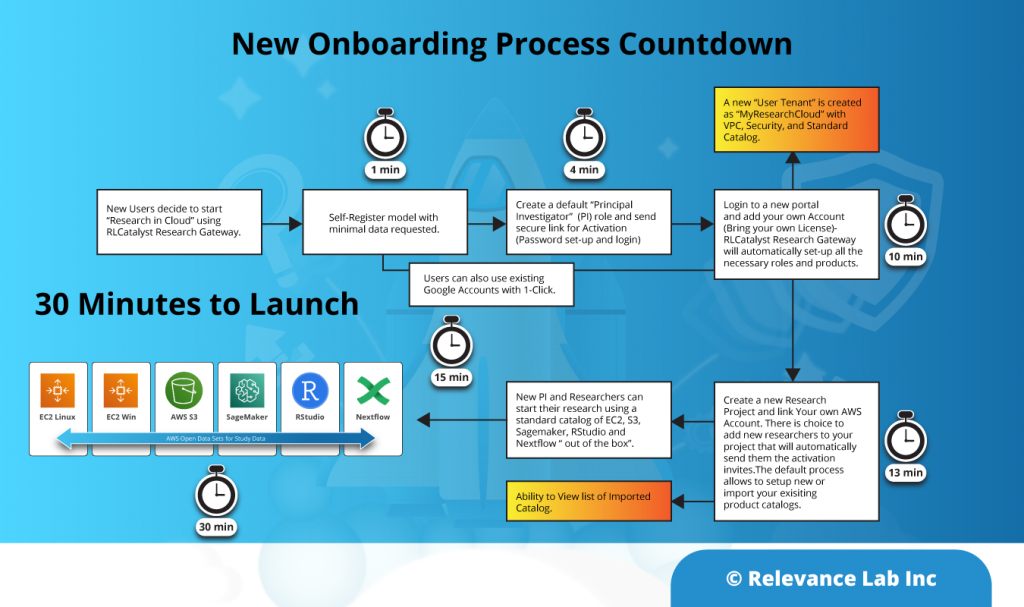

Based on our discussions with research stakeholders, especially small and medium-sized ones, it was clear that users want something as easy to consume as consumer-oriented activities such as e-shopping and consumer banking. This led to a simplified process for creating a “MyResearchCloud” that meets the following basic needs:

- “MyResearchCloud” is better suited to smaller research institutions with one or a few groups of Principal Investigators (PIs) driving research with a few fellow researchers.

- The model to set up, configure, collaborate, and consume needs to be extremely simple and come with pre-built templates, tools, and utilities.

- PIs should have full control of their cloud accounts and spending, with dynamic visibility and smart alerts.

- At any point, if the PI decides to stop using the solution, there should be no loss of productivity, and existing compute and data should be preserved.

- It should be easy to invite other users to collaborate while still controlling their access and security.

- Users should not be burdened with technical jargon while ordering simple products for day-to-day research using computation servers, data repositories, analysis IDE tools, and data processing pipelines.

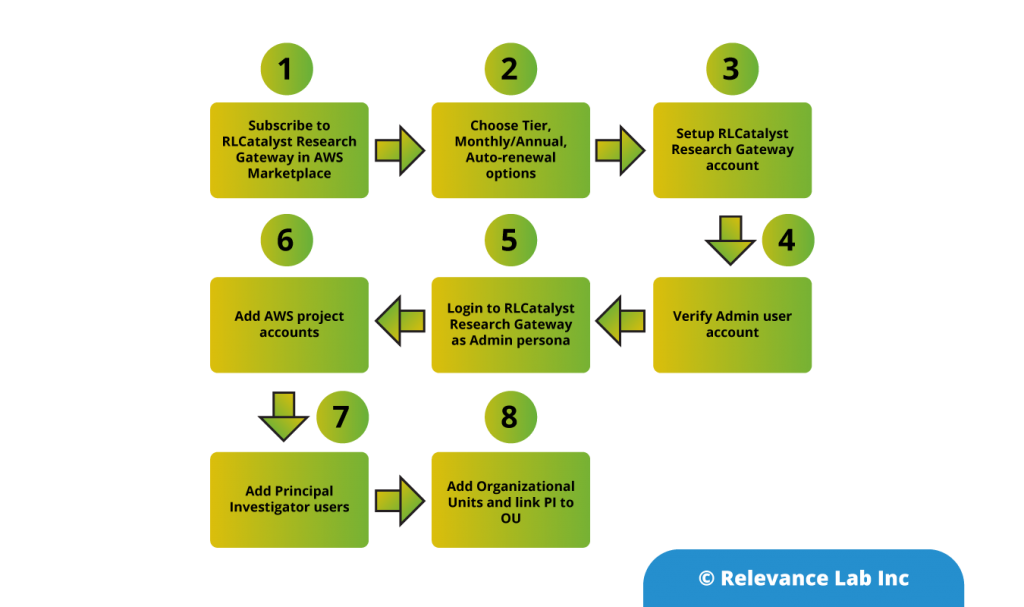

Based on these needs, the following simple steps have been enabled:

| Steps to Launch | Activity | Total time from Start |

|---|---|---|

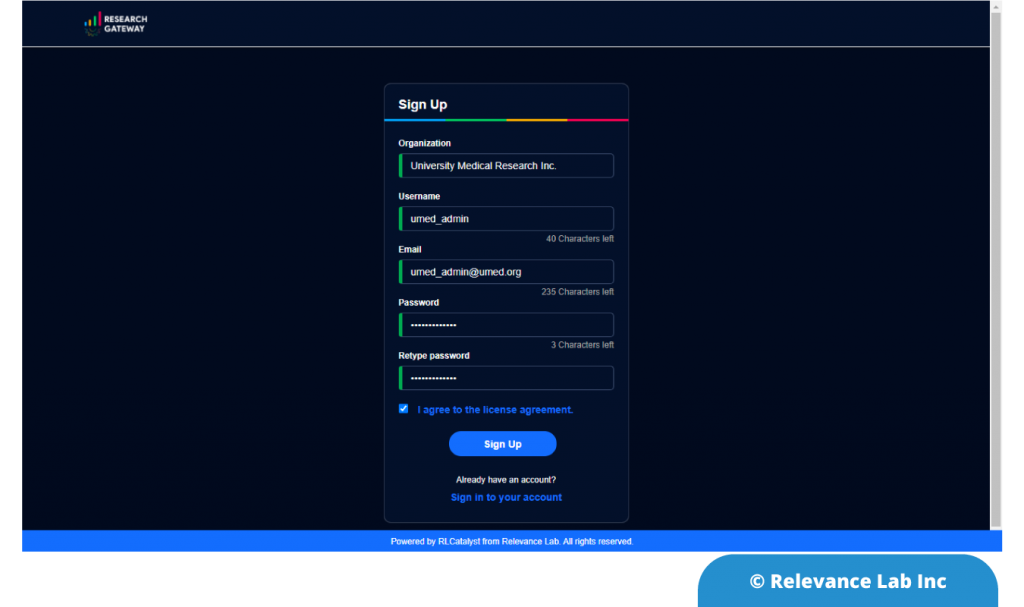

| Step-1 | As a Principal Investigator, create your own “MyResearchCloud” by using your Email ID or Google ID to login the first time on Research Gateway. | 1 min |

| Step-2 | If using a personal email ID, get an activation link and login for the first time with a secure password. | 4 min |

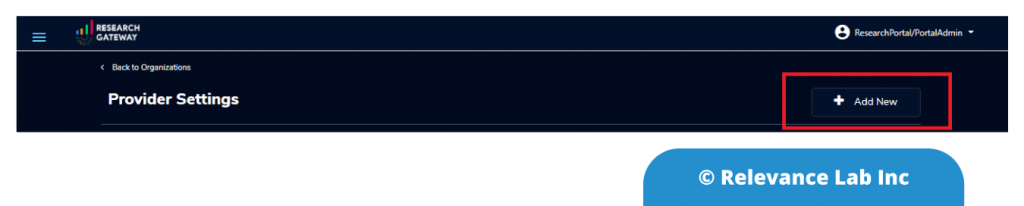

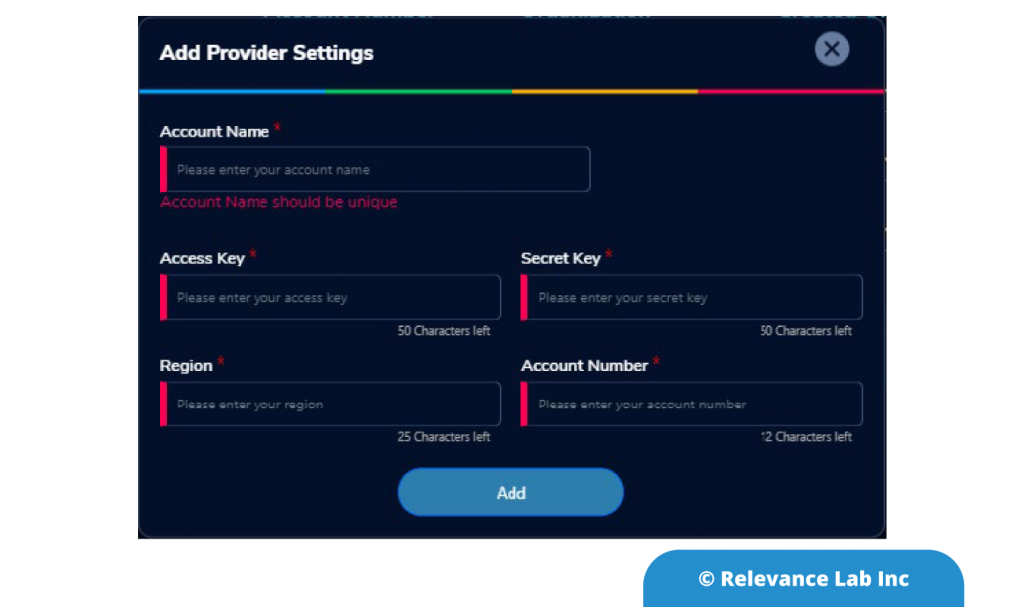

| Step-3 | Use your own AWS account and provide secure credentials for “MyResearchCloud” consumption. | 10 min |

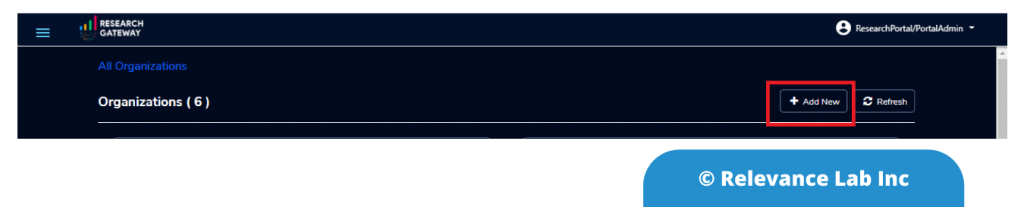

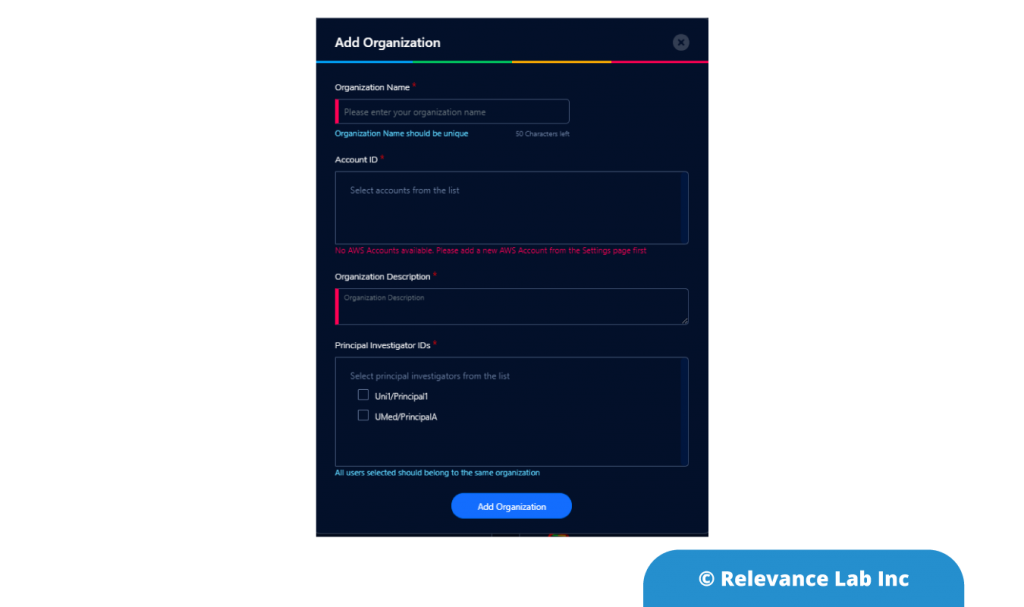

| Step-4 | Create a new Research Project and set up your secure environment with default networking, secure connections, and a standard catalog. You can also leverage your existing setup and catalog. | 13 min |

| Step-5 | Invite new researchers or start using the new setup to order your products to get started with a catalog covering data, compute, analytic tools, and workflow pipeline. | 15 min |

| Step-6 | Order the necessary products – EC2, S3, Sagemaker/RStudio, Nextflow pipelines. Use the Research Gateway to interact with these tools without the need to access AWS Cloud console for PI and Researchers. | 30 min |

The picture below shows the easy way to get started with the new Launchpad and its 30-minute countdown.

Architecture Details

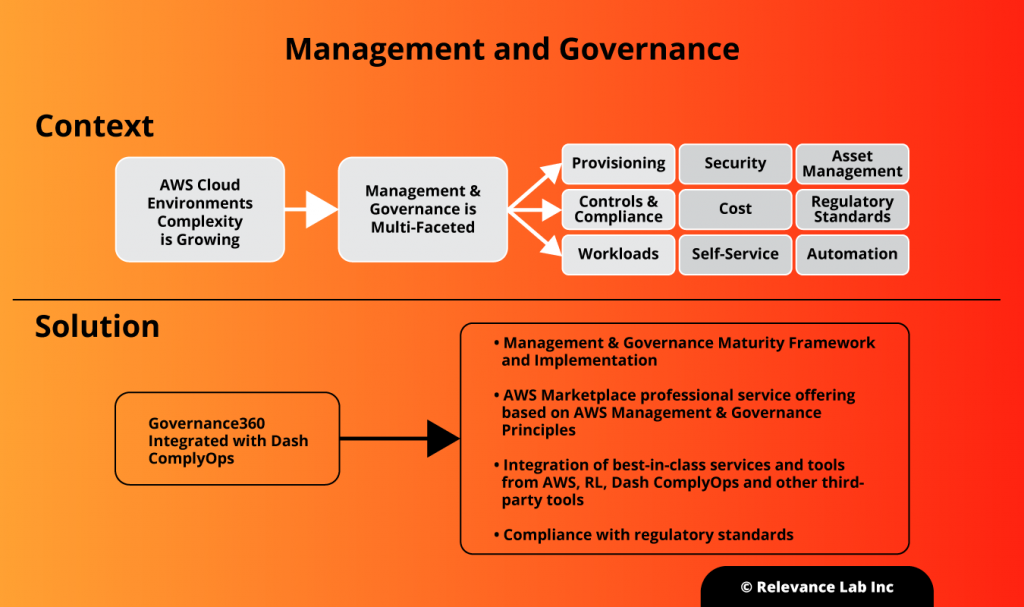

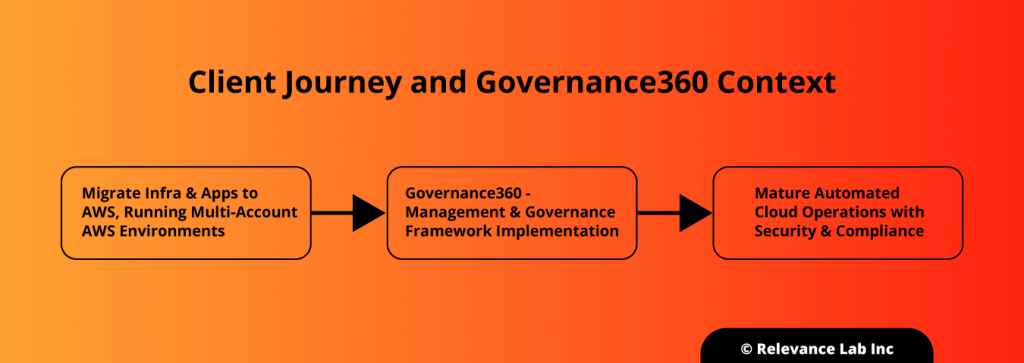

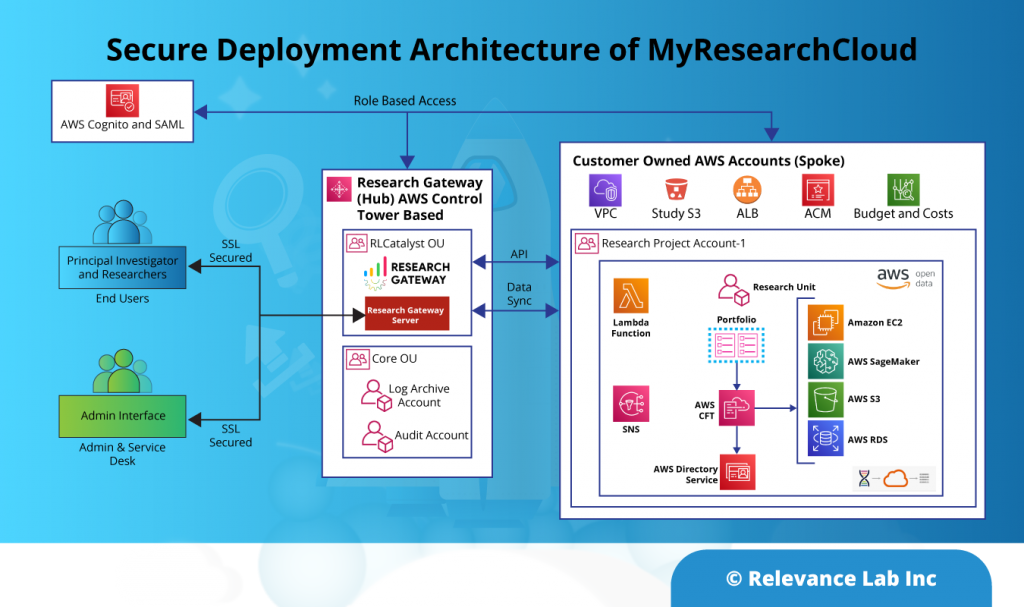

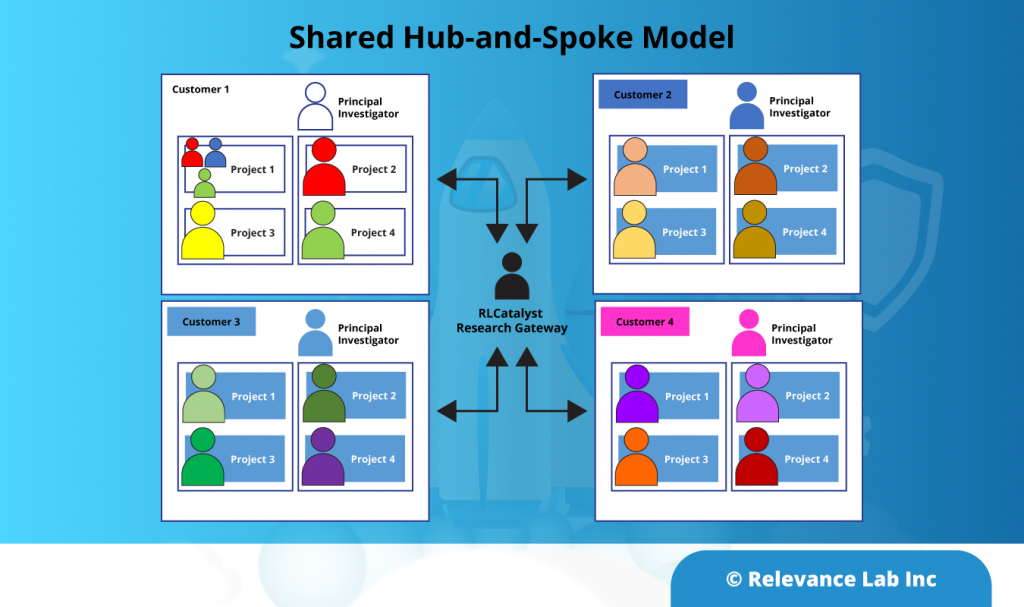

To balance the needs of speed and compliance, we have designed a hybrid model that allows researchers to “Bring your own License” while leveraging the benefits of SaaS. Our solution provides a “Gateway” model with a hub-and-spoke design, in which we provide and operate the “Hub” while researchers connect their own AWS research accounts as “Spokes”.

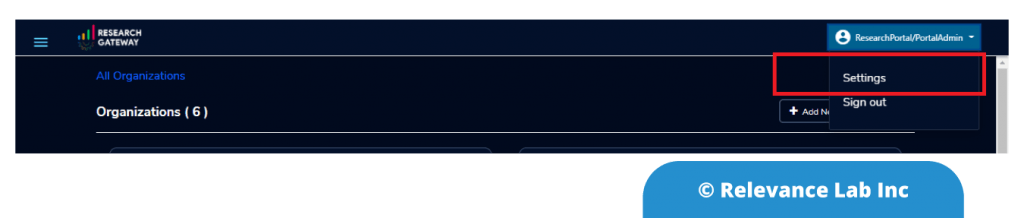

Security is a critical part of the SaaS architecture. In the hub-and-spoke model, the Research Gateway is hosted in our AWS account using cloud management and governance best practices controlled by AWS Control Tower. Each tenant is created using AWS security best practices of least-privilege and role-based access, so that no customer-specific keys or data are maintained in the Research Gateway. The architecture and SaaS product are validated as per the AWS ISV Path program for Well-Architected principles and data security best practices.
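As an illustration of role-based access with no stored keys, the sketch below builds the kind of IAM trust policy a spoke account could attach to a role that the hub assumes through AWS STS. This is a common AWS cross-account pattern and an assumption on our part, not a description of the Research Gateway's actual implementation; the function name, the account ID, and the external ID are hypothetical placeholders.

```python
import json


def build_trust_policy(hub_account_id: str, external_id: str) -> dict:
    """Return a trust policy letting a hub account assume a role in a spoke account.

    Hypothetical helper: hub_account_id and external_id are illustrative inputs.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Only principals in the hub account may assume the role.
                "Principal": {"AWS": f"arn:aws:iam::{hub_account_id}:root"},
                "Action": "sts:AssumeRole",
                # The external ID guards against the confused-deputy problem.
                "Condition": {"StringEquals": {"sts:ExternalId": external_id}},
            }
        ],
    }


# Placeholder values, for illustration only.
policy = build_trust_policy("111122223333", "example-external-id")
print(json.dumps(policy, indent=2))
```

With a pattern like this, the hub only ever holds short-lived credentials issued by STS, and the account owner can revoke access at any time by deleting the role or editing its trust policy.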

The following diagram explains in more detail the hub-and-spoke design for the Research Gateway.

This decoupled design makes it easy to use a shared Gateway while connecting your own AWS account for consumption, with full control and transparency in billing and tracking. For many small and mid-sized research teams, this is the best balance between using a third-party provider-hosted account and having their own end-to-end setup. This structure is also useful for deploying a hosted solution covering multiple group entities (or conglomerates), typically a collaborative network of universities working under a central entity (usually funded by government grants) in large-scale genomics grant programs. For customers with more specific security and regulatory needs, we also allow both the hub and spoke accounts to be self-hosted. The flexible architecture can suit different deployment models.

AWS Services that MyResearchCloud uses for each customer:

| Service Needed for Secure Research | Solution Provided | Run Time Costs for Customers |
|---|---|---|
| Need for DNS-based friendly URL to access MyResearchCloud | SaaS RLCatalyst Research Gateway | No additional costs |
| Secure SSL-based connection to my resources | AWS ACM certificates are used and an AWS ALB is created for each Project Tenant | The AWS ALB is created and deleted based on dependent resources to avoid fixed costs |
| Network Design | Default VPC created for new accounts to save users the trouble of network setup | No additional costs |
| Security | Role-based access provided to RLCatalyst Research Gateway with no keys stored locally | No additional costs. Users can revoke access to RLCatalyst Research Gateway anytime. |
| IAM Roles | AWS Cognito based model for Hub | No additional costs for customers other than SaaS user-based license |
| AWS Resources Consumption | Directly consumed based on user actions. Smart features are available by default, with a 15 min auto-stop for idle resources to optimize spend. | Actual usage costs, with Spot Instances suggested to optimize costs for large workloads |
| Research Data Storage | Default S3 created for Projects with the ability to have shared Project Data and also create private Study Data. | Ability to auto-mount storage for compute instances with easy access, backup, and sync at base AWS costs |
| AWS Budgets and Cost Tracking | Each project is configured to track budget vs. actual costs with auto-tagging for researchers. Notification and control to pause or stop consumption when budgets are reached. | No additional costs |
| Audit Trail | All user actions are tracked in a secure audit trail and are visible to users. | No additional costs |
| Create and use a Standard Catalog of Research Products | Standard Catalog provided and uploaded to new projects. Users can also bring their own catalogs. | No additional costs |
| Data Ingress and Egress for Large Data Sets | Using standard cloud storage and data transfer features, users can sync data to Study buckets. A small set of files can also be uploaded from the UI. | Standard cloud data transfer costs apply |
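To make the 15-minute auto-stop for idle resources more concrete, here is a minimal sketch of one way an idle check could work. It assumes CPU utilization samples are already being collected for each instance; the 5% threshold, the function name, and the sample format are our assumptions for illustration, not the product's actual logic.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

IDLE_WINDOW = timedelta(minutes=15)  # idle period taken from the table above
CPU_IDLE_THRESHOLD = 5.0             # percent; an assumed value for this sketch


def should_auto_stop(samples: List[Tuple[datetime, float]], now: datetime) -> bool:
    """Return True when an instance has looked idle for the whole idle window.

    samples is a list of (timestamp, cpu_percent) pairs (hypothetical format).
    """
    if not samples:
        return False  # no data: never stop blindly
    window_start = now - IDLE_WINDOW
    # Require observations reaching back at least as far as the window start,
    # so a freshly launched instance is not stopped prematurely.
    if min(ts for ts, _ in samples) > window_start:
        return False
    recent = [cpu for ts, cpu in samples if window_start <= ts <= now]
    return bool(recent) and all(cpu < CPU_IDLE_THRESHOLD for cpu in recent)


now = datetime(2021, 1, 1, 12, 0)
idle = [(now - timedelta(minutes=m), 1.0) for m in (20, 15, 10, 5, 0)]
print(should_auto_stop(idle, now))
```

A real implementation would likely also consider network or session activity; CPU alone is used here only to keep the example short.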

In our experience, research institutions can enable new groups to use MyResearchCloud with small monthly budgets (starting at US $100 a month) and scale their cloud resources with cost control and optimized spending.
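The budget tracking described in the table, with notification and control to pause or stop consumption when budgets are reached, can be sketched as a simple threshold check. The threshold percentages and all names below are hypothetical choices for illustration; the source does not state the actual values or implementation.

```python
from enum import Enum


class BudgetAction(Enum):
    NONE = "none"
    NOTIFY = "notify"
    PAUSE = "pause"
    STOP = "stop"


def budget_action(
    budget_usd: float,
    actual_usd: float,
    notify_at: float = 0.8,  # assumed: alert at 80% of budget
    pause_at: float = 0.9,   # assumed: pause new orders at 90%
    stop_at: float = 1.0,    # assumed: stop consumption at 100%
) -> BudgetAction:
    """Map budget vs. actual spend to the strictest action that applies."""
    if budget_usd <= 0:
        raise ValueError("budget_usd must be positive")
    used = actual_usd / budget_usd
    if used >= stop_at:
        return BudgetAction.STOP
    if used >= pause_at:
        return BudgetAction.PAUSE
    if used >= notify_at:
        return BudgetAction.NOTIFY
    return BudgetAction.NONE


# Example with the US $100 monthly starting budget mentioned above.
for spend in (50, 85, 95, 120):
    print(spend, budget_action(100, spend).value)
```

Checking the strictest threshold first means each spend level maps to exactly one action, which keeps the alerting logic easy to reason about.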

Summary

With an intent to make scientific research in the cloud as easy to access and consume as typical Business to Consumer (B2C) customer experiences, the new “MyResearchCloud” model from Relevance Lab provides flexibility, cost management, and secure collaboration to truly unlock the potential of the cloud. It gives researchers a fully functional workbench, going from “No-Cloud” to a “Full-Cloud” launch in 30 minutes.

If this seems exciting and you would like to know more or try this out, do write to us at marketing@relevancelab.com.

Reference Links

Driving Frictionless Research on AWS Cloud with Self-Service Portal

Leveraging AWS HPC for Accelerating Scientific Research on Cloud

RLCatalyst Research Gateway Built on AWS

Health Informatics and Genomics on AWS with RLCatalyst Research Gateway

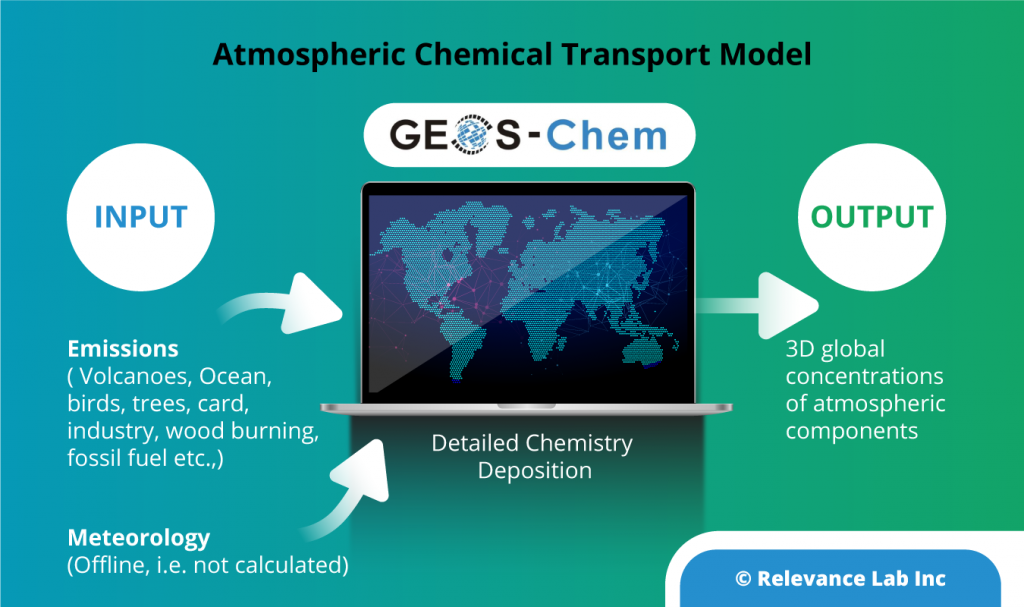

How to speed up the GEOS-Chem Earth Science Research using AWS Cloud?

RLCatalyst Research Gateway Demo

AWS training pathway for researchers and research IT